What's new

Here you'll find a curated collection of our most insightful and engaging blog content, neatly organized into series for your convenience. Each series focuses on a unique theme or topic, providing a deep dive into the subject.

Practice 50 React Coding Interview Questions with Solutions

Practice 50 React coding interview questions with solutions. Essential for front-end developers aiming to excel in their 2025 job interviews.

Author: Nitesh Seram | 6 min read | Jan 30, 2025

React has become the go-to library for building modern web applications, and mastering it requires more than just reading documentation—you need hands-on practice. Whether you're a beginner or an experienced developer, coding questions can help sharpen your React skills and reinforce core concepts.

On our platform, we offer a curated list of interactive React coding questions designed to test your UI development skills. These questions range from simple UI components to complex interactive elements, all helping you level up as a React developer.

Why Practice React Coding Questions?

- Improve Problem-Solving Skills: Tackle real-world UI problems and gain practical experience
- Strengthen Component-Based Thinking: Learn how to structure and optimize React components
- Master State Management: Work with React's state and hooks in practical scenarios
- Build a Strong UI Foundation: Create reusable and accessible UI components
- Prepare for Job Interviews: Many of these questions reflect common UI questions asked in React interviews

Here's a sneak peek at some of the most popular React coding questions available on our platform:

Beginner-Friendly Questions

1. Accordion

Build an accordion component that displays a list of vertically stacked sections, each containing a title and a content snippet.

2. Contact Form

Build a contact form which submits user feedback and contact details to a back end API.

3. Holy Grail

Build the famous holy grail layout consisting of a header, 3 columns, and a footer.

4. Progress Bars

Build a list of progress bars that fill up gradually when they are added to the page.

5. Mortgage Calculator

Build a calculator that computes the monthly mortgage payment for a loan.

6. Flight Booker

Build a component that books a flight for specified dates.

7. Generate Table

Generate a table of numbers given the number of rows and columns.

8. Progress Bar

Build a progress bar component that shows the percentage completion of an operation.

9. Temperature Converter

Build a temperature converter widget that converts temperature values between Celsius and Fahrenheit.

10. Tweet

Build a component that resembles a Tweet from Twitter.

Intermediate Questions

11. Tabs

Build a tabs component that displays a list of tab elements and one associated panel of content at a time.

12. Data Table

Build a users data table with pagination features.

13. Dice Roller

Build a dice roller app that simulates the results of rolling 6-sided dice.

14. File Explorer

Build a file explorer component to navigate files and directories in a tree-like hierarchical viewer.

15. Like Button

Build a Like button that changes appearance based on its state.

16. Modal Dialog

Build a reusable modal dialog component that can be opened and closed.

17. Star Rating

Build a star rating component that shows a row of star icons for users to select the number of filled stars corresponding to the rating.

18. Todo List

Build a Todo list that lets users add new tasks and delete existing tasks.

19. Traffic Light

Build a traffic light where the lights switch from green to yellow to red after predetermined intervals and loop indefinitely.

20. Digital Clock

Build a 7-segment digital clock that shows the current time.

21. Tic-tac-toe

Build a tic-tac-toe game that is playable by two players.

22. Image Carousel

Build an image carousel that displays a sequence of images.

23. Job Board

Build a job board that displays the latest job postings from Hacker News.

24. Stopwatch

Build a stopwatch widget that can measure how much time has passed.

25. Transfer List

Build a component that lets users transfer items between two lists.

26. Accordion II

Build an accessible accordion component that has the right ARIA roles, states, and properties.

27. Accordion III

Build a fully accessible accordion component that has keyboard support according to ARIA specifications.

28. Analog Clock

Build an analog clock where the hands update and move like a real clock.

29. Data Table II

Build a users data table with sorting features.

30. File Explorer II

Build a semi-accessible file explorer component that has the right ARIA roles, states, and properties.

31. File Explorer III

Build a file explorer component using a flat DOM structure.

32. Grid Lights

Build a grid of lights where the lights deactivate in the reverse order they were activated.

33. Modal Dialog II

Build a semi-accessible modal dialog component that has the right ARIA roles, states, and properties.

34. Modal Dialog III

Build a moderately-accessible modal dialog component that supports common ways to close the dialog.

35. Progress Bars II

Build a list of progress bars that fill up gradually in sequence, one at a time.

36. Tabs II

Build a semi-accessible tabs component that has the right ARIA roles, states, and properties.

37. Tabs III

Build a fully accessible tabs component that has keyboard support according to ARIA specifications.

38. Progress Bars III

Build a list of progress bars that fill up gradually and concurrently, with at most 3 bars filling at a time.

39. Birth Year Histogram

Build a widget that fetches birth year data from an API and plots it on a histogram.

40. Connect Four

Build a game for two players who take turns to drop colored discs from the top into a vertically suspended board/grid.

41. Image Carousel II

Build an image carousel that smoothly transitions between images.

Advanced Questions

42. Nested Checkboxes

Build a nested checkboxes component with parent-child selection logic.

43. Auth Code Input

Build an auth code input component that allows users to enter a 6-digit authorization code.

44. Progress Bars IV

Build a list of progress bars that fill up gradually and concurrently, with at most 3 bars filling at a time, and that supports pausing and resuming.

45. Data Table III

Build a generalized data table with pagination and sorting features.

46. Modal Dialog IV

Build a fully-accessible modal dialog component that supports all required keyboard interactions.

47. Selectable Cells

Build an interface where users can drag to select multiple cells within a grid.

48. Wordle

Build Wordle, the word-guessing game that took the world by storm.

49. Tic-tac-toe II

Build an N x N tic-tac-toe game that requires M consecutive marks to win.

50. Image Carousel III

Build an image carousel that smoothly transitions between images and has a minimal DOM footprint.
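To give a sense of the logic behind one of these questions: the core of the Mortgage Calculator (question 5) is the standard fixed-rate amortization formula. The sketch below assumes a fixed annual interest rate compounded monthly; the function name `monthlyPayment` and its parameters are illustrative and not part of the original question.

```js
/**
 * Monthly payment for a fixed-rate loan (standard amortization formula):
 *   M = P * r * (1 + r)^n / ((1 + r)^n - 1)
 * where r is the monthly interest rate and n is the number of monthly payments.
 */
function monthlyPayment(principal, annualRatePercent, years) {
  const n = years * 12; // total number of monthly payments
  const r = annualRatePercent / 100 / 12; // monthly interest rate as a fraction
  if (r === 0) {
    return principal / n; // no interest: split the principal evenly
  }
  const growth = Math.pow(1 + r, n);
  return (principal * r * growth) / (growth - 1);
}

console.log(monthlyPayment(100000, 6, 30).toFixed(2)); // 599.55
```

In the UI, the inputs would typically be controlled form fields, with the result formatted for display only at render time.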

Top 30 React js Interview Questions and Answers to Get Hired in 2025

30 Essential React Interview Questions and Answers to Help You Prepare for 2025 Front-End Job Interviews

Author: Nitesh Seram | 16 min read | Jan 29, 2025

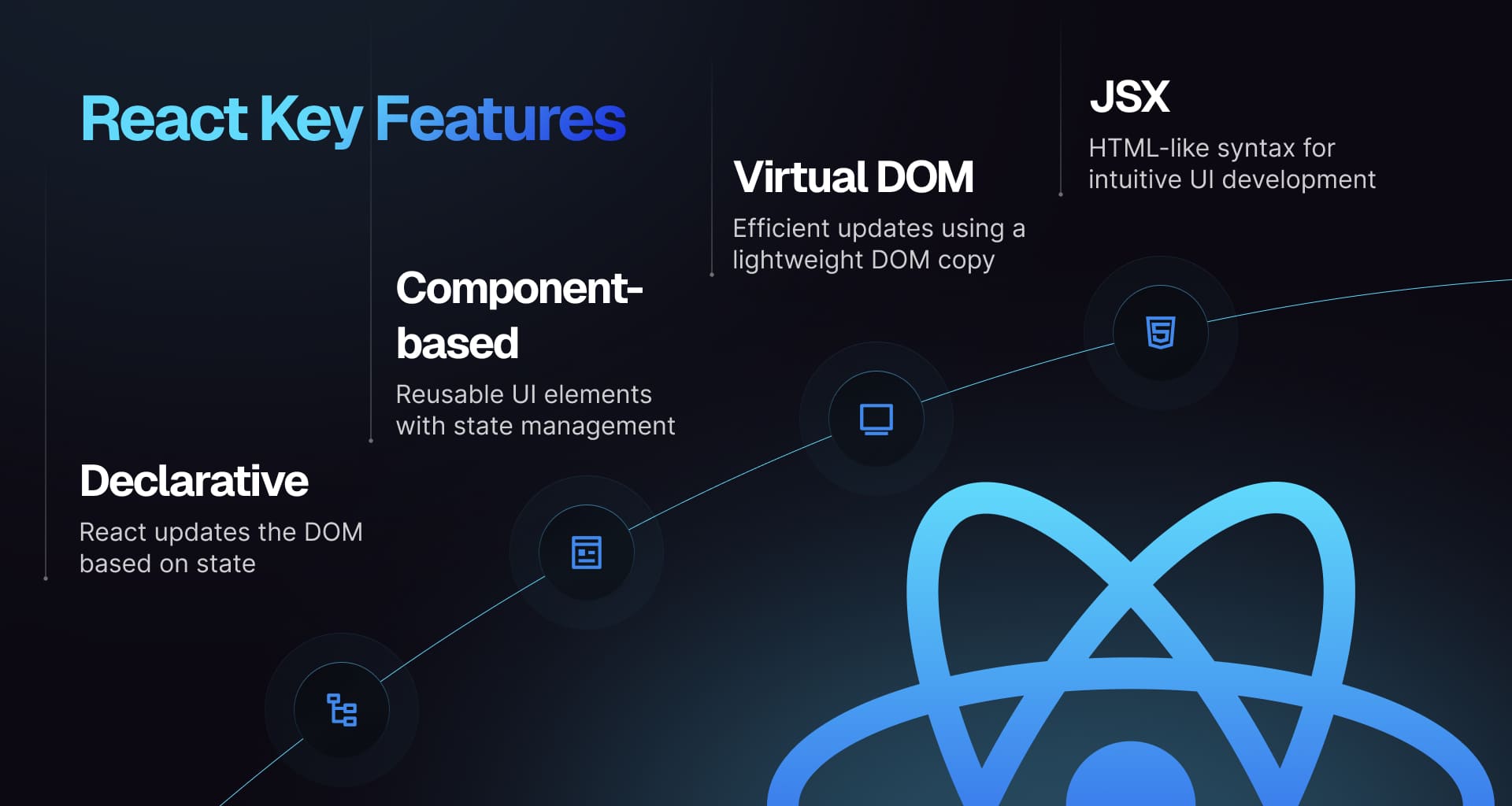

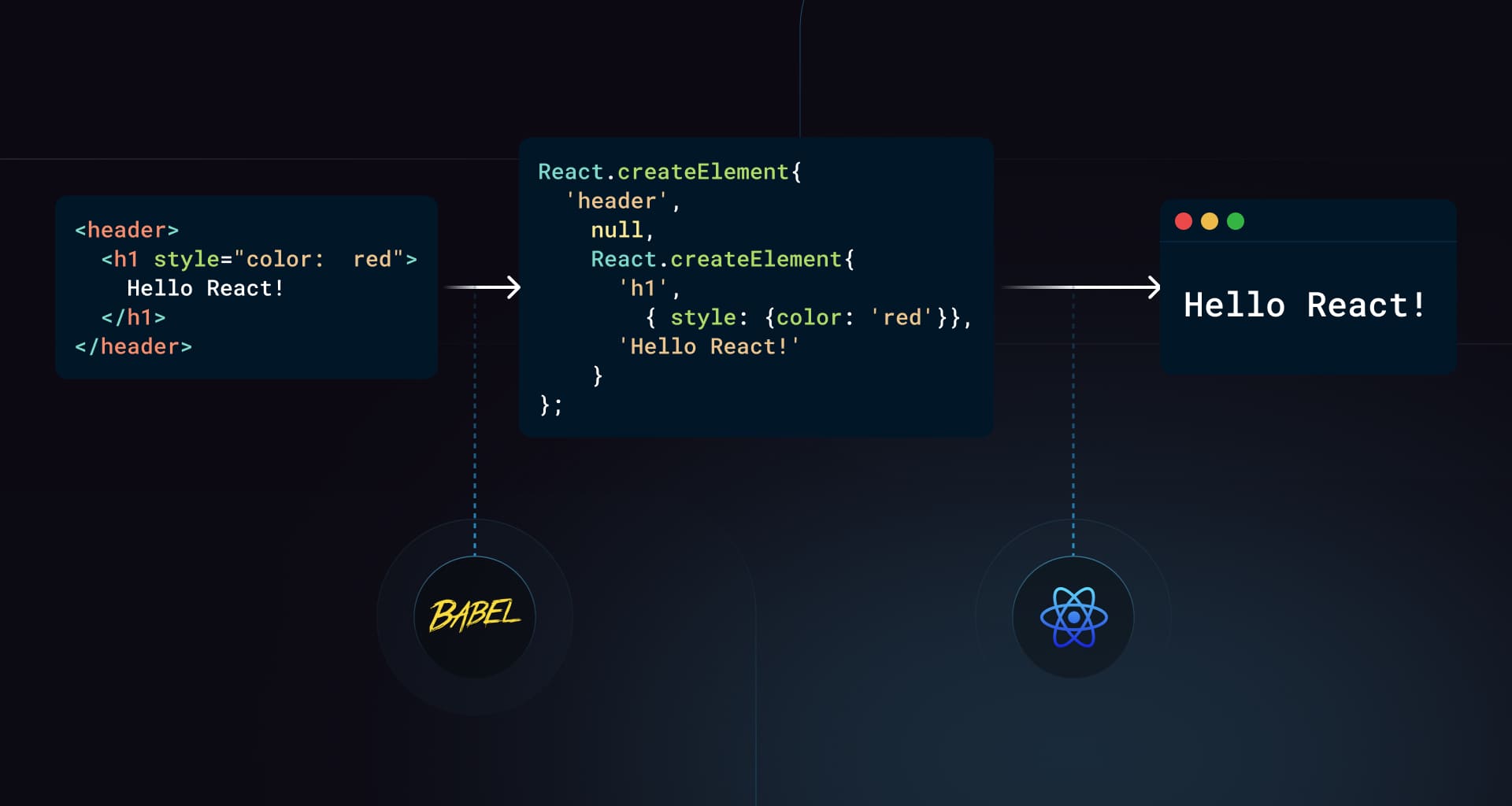

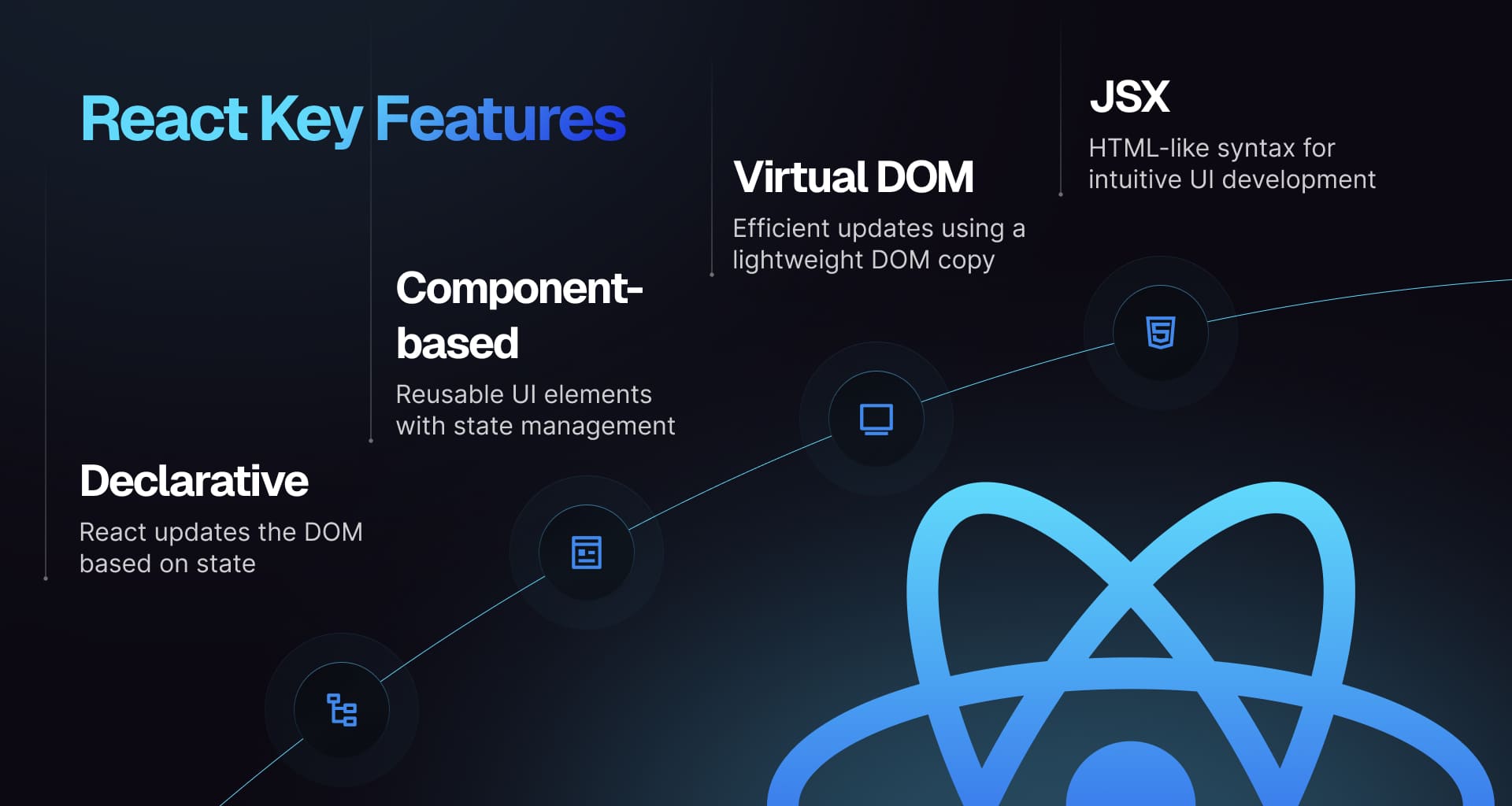

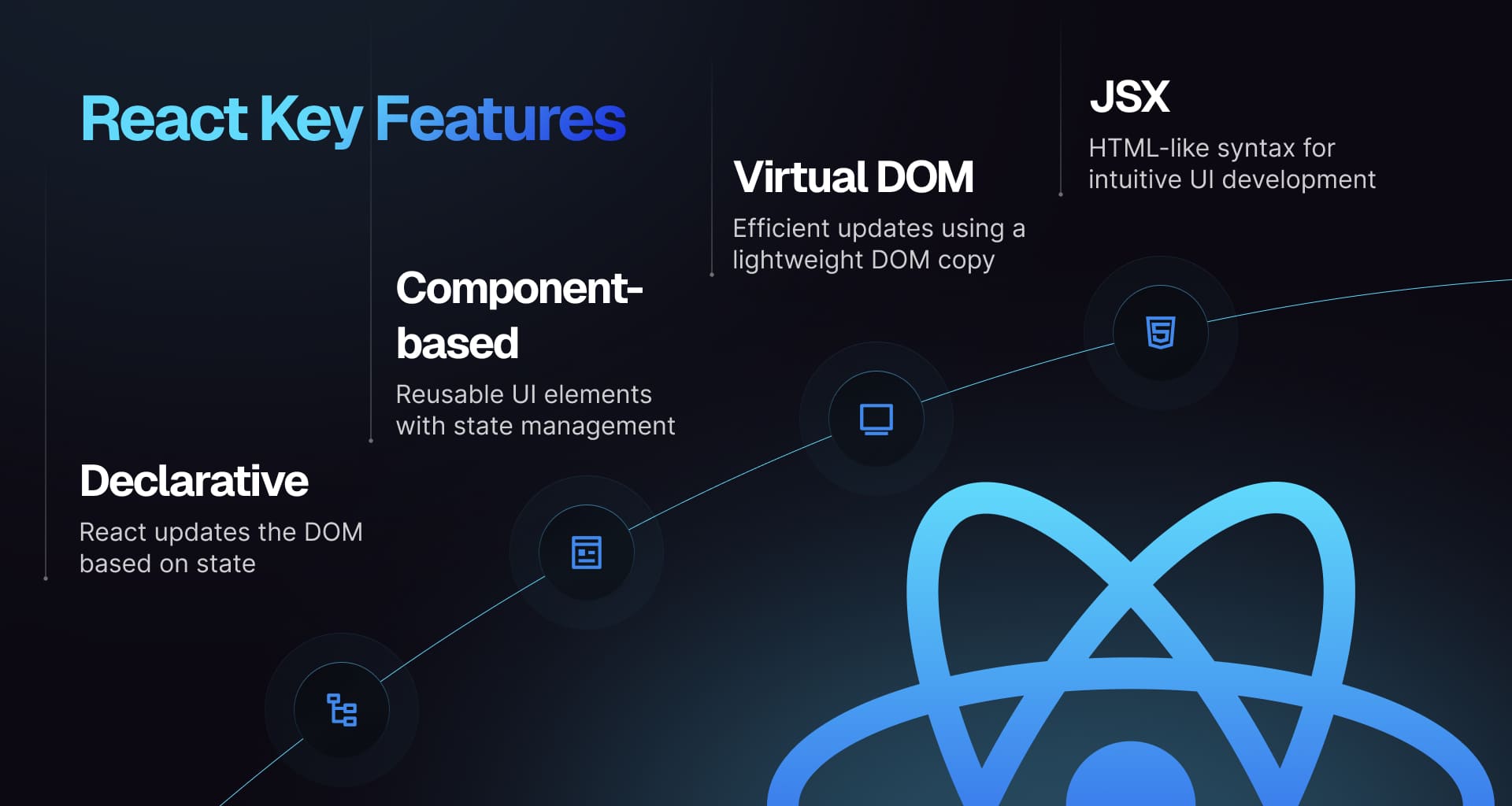

As the demand for skilled front-end developers rises in 2025, proficiency in React has become essential. This JavaScript library is widely used for building dynamic user interfaces, and mastering it requires a solid understanding of its core principles and advanced features.

To help you prepare for your upcoming interviews, we've compiled 30 essential React interview questions and answers that cover a range of topics from fundamental concepts to advanced techniques. Whether you're an experienced developer or new to React, this guide will equip you with the knowledge needed to excel in interviews.

1. What is the difference between React Node, Element, and Component?

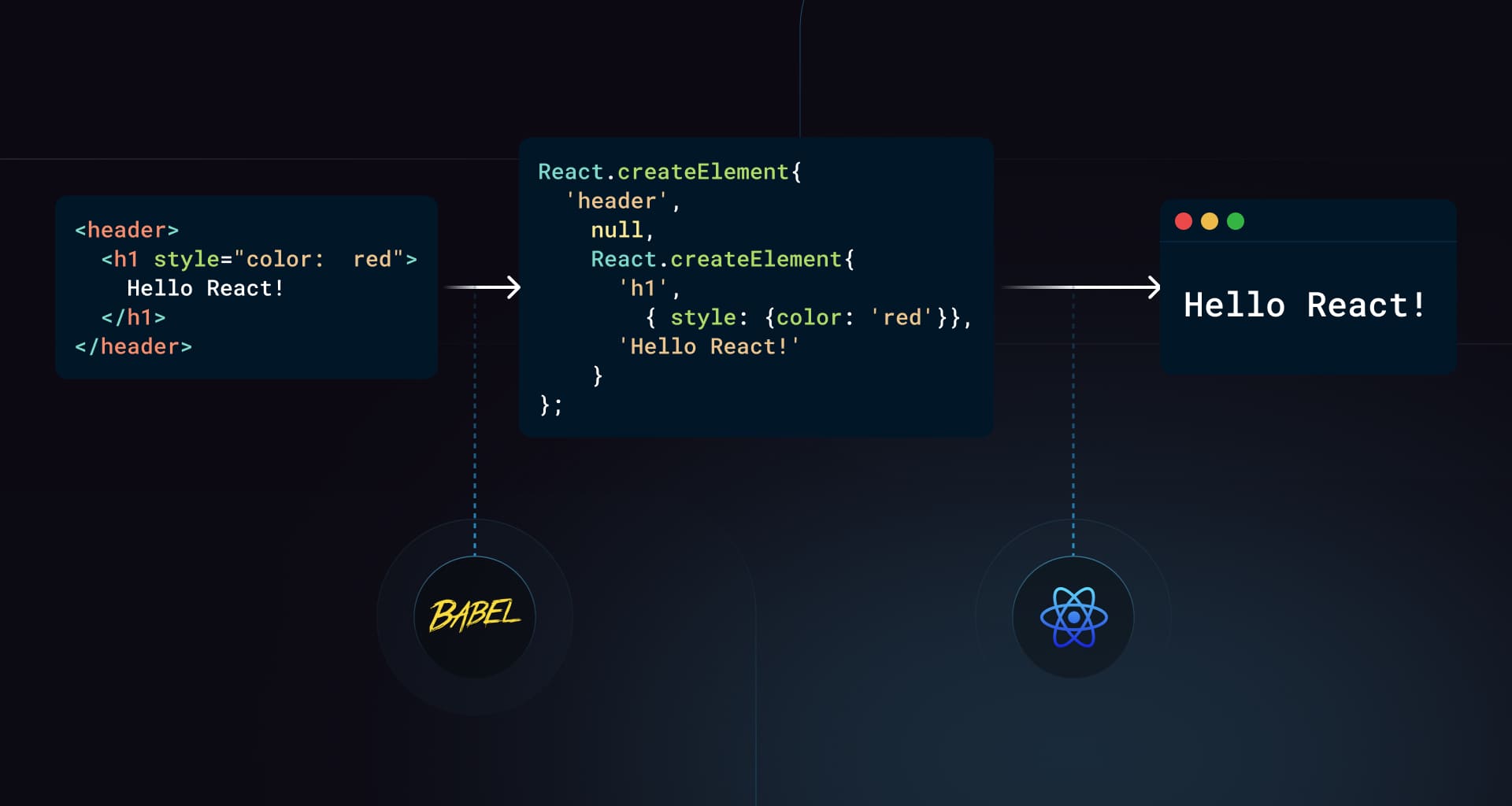

React Node, Element, and Component are three fundamental concepts in React.

- Node: A React Node is any renderable unit in React, such as an element, a string, a number, or `null`.
- Element: An element is a plain object that describes a DOM element or a component, i.e. what you want to see on the screen. Elements are immutable: once created, their props and children can't be changed.
- Component: A component is a reusable piece of UI that can contain one or more elements. Components can be either functional or class-based. They accept inputs called props and return React elements that describe what should appear on the screen.
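To make the distinction concrete, here is a simplified model in plain JavaScript. `createElementSketch` is a hypothetical helper, not React's API: real elements created by `React.createElement` or JSX also carry internal fields such as `$$typeof`, `key`, and `ref`. The point is that an element is just a frozen description, while a component is a function that returns such descriptions.

```js
// Hypothetical, simplified stand-in for React.createElement: returns a plain, frozen object.
function createElementSketch(type, props, ...children) {
  return Object.freeze({
    type, // a tag name like 'h1', or a component function
    props: Object.freeze({ ...props, children }),
  });
}

// A component: a function that takes props and returns an element.
function Greeting(props) {
  return createElementSketch('h1', { className: 'greeting' }, `Hello, ${props.name}`);
}

// An element whose type is the component; "rendering" it means calling the component.
const element = createElementSketch(Greeting, { name: 'Ada' });
const rendered = element.type(element.props);

console.log(rendered.type); // h1
console.log(rendered.props.children[0]); // Hello, Ada
console.log(Object.isFrozen(rendered)); // true
```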

2. What are React Fragments used for?

React Fragments are a feature introduced in React 16.2 that let you group multiple child elements without adding extra nodes to the DOM. Fragments are useful when you need to return multiple elements from a component but don't want to wrap them in a parent element. They help keep the DOM structure clean and avoid unnecessary div wrappers.

Here's an example of using React Fragments:

```jsx
function App() {
  return (
    <>
      <Header />
      <Main />
      <Footer />
    </>
  );
}
```

In this example, the `<>...</>` syntax is a shorthand for declaring a React Fragment. It allows you to group the `Header`, `Main`, and `Footer` components without adding an extra div to the DOM.

3. What is the purpose of the `key` prop in React?

The `key` prop is a special attribute used in React to uniquely identify elements in a list. When rendering a list of elements, React uses the `key` prop to keep track of each element's identity and optimize the rendering process. The `key` prop helps React identify which items have changed, been added, or been removed, allowing it to update the DOM efficiently.

4. What is the consequence of using array indices as keys in React?

Using array indices as keys in React can lead to bugs and performance issues. With index keys, React identifies each element by its position in the list. When items are inserted, removed, or reordered, the same index now refers to a different item, so React may reuse the wrong DOM node and component state (such as the text typed into an input) for an item, or re-render more elements than necessary.
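The problem can be modeled in a few lines of plain JavaScript. `matchByKey` is a hypothetical helper that mimics, in a very simplified way, how a reconciler pairs old and new list children by key; it is not React's implementation. With index keys, prepending an item makes key `0` point at a different item than before, so whatever was attached to the old item would be reused for the new one.

```js
// Simplified model: pair each new child with the old child that had the same key.
function matchByKey(oldItems, newItems, getKey) {
  const oldByKey = new Map(oldItems.map((item, index) => [getKey(item, index), item]));
  return newItems.map((item, index) => {
    const key = getKey(item, index);
    // null means "no old child with this key": the child would be created from scratch
    return { key, item, reusedFrom: oldByKey.get(key) ?? null };
  });
}

const oldList = [{ id: 'a', label: 'A' }, { id: 'b', label: 'B' }];
const newList = [{ id: 'z', label: 'Z' }, ...oldList]; // prepend a new item

// Index keys: the new "Z" row is paired with the old "A" row (wrong reuse).
const byIndex = matchByKey(oldList, newList, (item, index) => index);
console.log(byIndex[0].reusedFrom.label); // A

// Stable id keys: "Z" is recognized as new, and "A" keeps its own identity.
const byId = matchByKey(oldList, newList, (item) => item.id);
console.log(byId[0].reusedFrom); // null
console.log(byId[1].reusedFrom.label); // A
```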

For example, consider the following code:

```jsx
function App() {
  const items = ['A', 'B', 'C'];
  return (
    <ul>
      {items.map((item, index) => (
        <li key={index}>{item}</li>
      ))}
    </ul>
  );
}
```

If you insert, remove, or reorder entries in the `items` array, React may pair the wrong DOM nodes and component state with each item, because it relies on the index as the key. To avoid this issue, use unique IDs that are stable across renders.

5. What is the difference between Controlled and Uncontrolled React components?

Controlled and Uncontrolled components are two common patterns used in React to manage form inputs and state.

- Controlled Components: In a controlled component, form data is handled by React state and is updated via state changes. The input value is controlled by React, and any changes to the input are handled by React event handlers. Controlled components provide more control over form inputs and allow you to validate and manipulate the input data before updating the state.
- Uncontrolled Components: In an uncontrolled component, form data is handled by the DOM itself, and React does not control the input value. The input value is managed by the DOM, and you can access the input value using a ref. Uncontrolled components are useful for integrating with third-party libraries or when you need to access the input value imperatively.

Example of a controlled component:

```jsx
function ControlledInput() {
  const [value, setValue] = React.useState('');
  return (
    <input
      type="text"
      value={value}
      onChange={(e) => setValue(e.target.value)}
    />
  );
}
```

Example of an uncontrolled component:

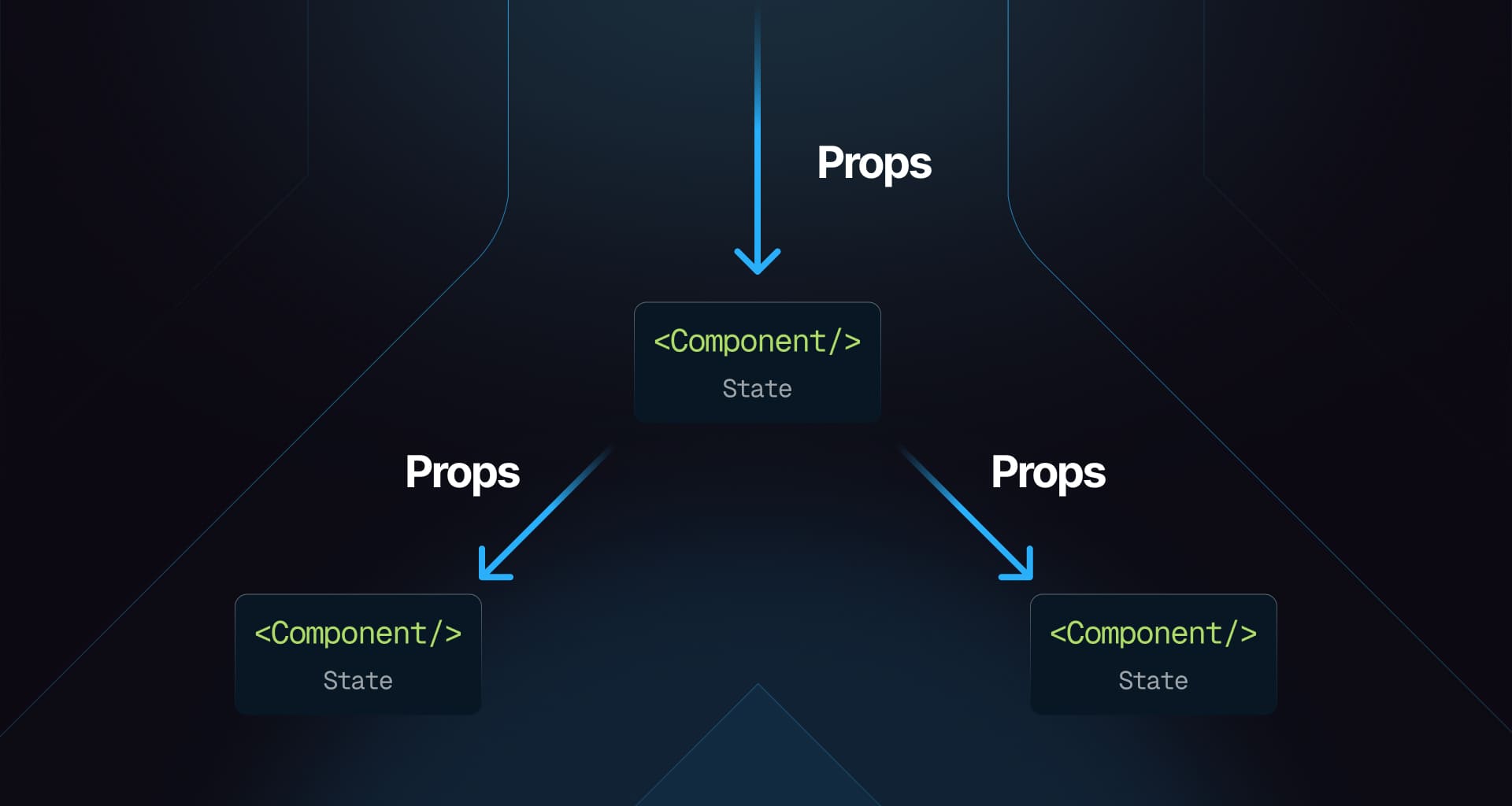

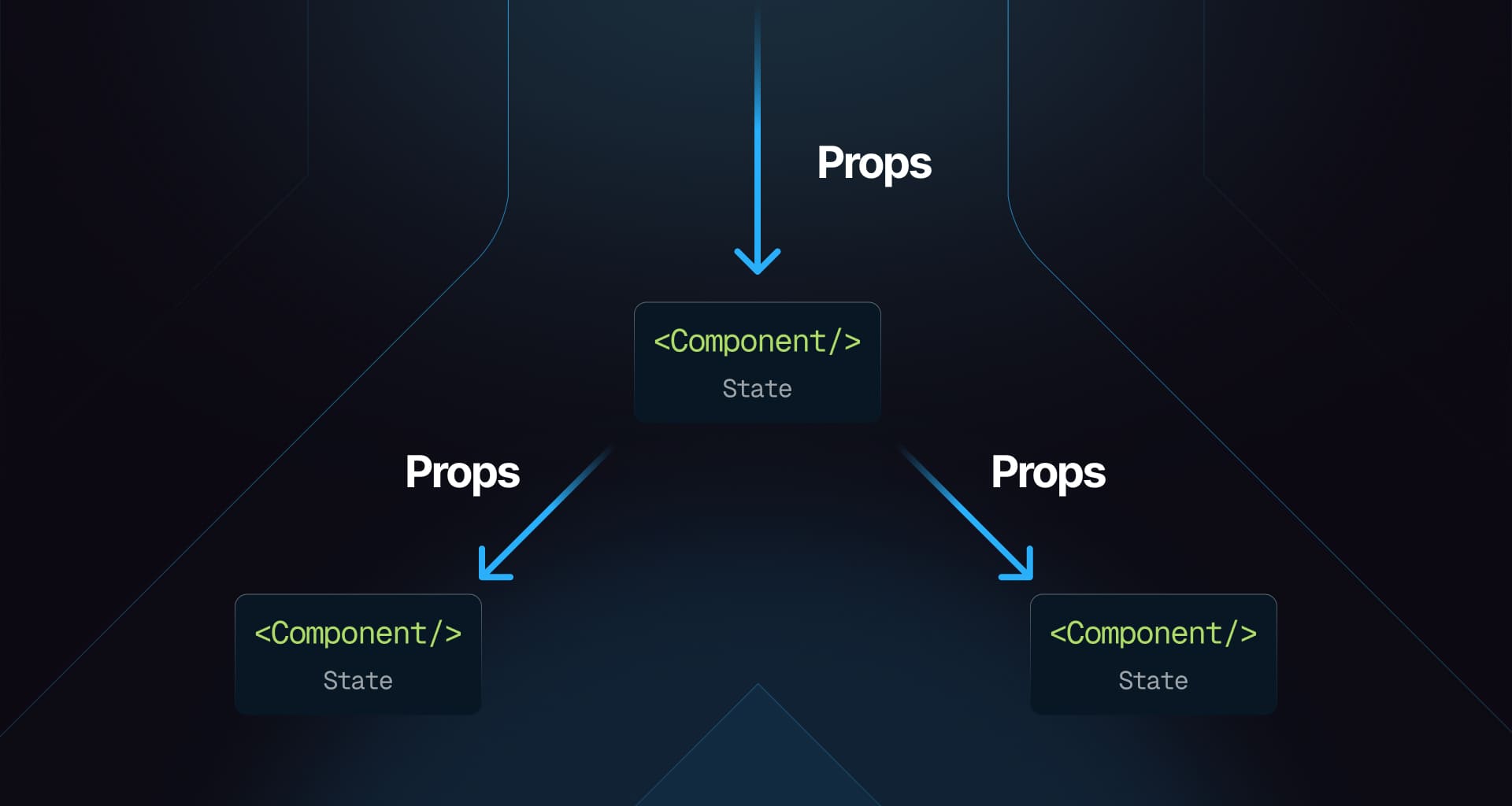

```jsx
function UncontrolledInput() {
  const inputRef = React.useRef(null);
  // The DOM owns the value; read it when needed with inputRef.current.value
  return <input type="text" ref={inputRef} />;
}
```

6. How would you lift the state up in a React application, and why is it necessary?

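Lifting state up in React involves moving state from child components to their nearest common ancestor. It is necessary when sibling components (or other components without a direct parent-child relationship) need to share and stay in sync with the same data: the ancestor owns the state and passes it, along with functions that update it, down as props. This gives the shared data a single source of truth; without it, each component would keep its own copy and the copies could drift apart. Example: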

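```jsx
import { useState } from 'react';

const Parent = () => {
  // The shared state lives in the closest common ancestor
  const [counter, setCounter] = useState(0);
  return (
    <div>
      <Child1 counter={counter} />
      <Child2 setCounter={setCounter} />
    </div>
  );
};

// Reads the shared state
const Child1 = ({ counter }) => <h1>{counter}</h1>;

// Updates the shared state through the setter passed down from Parent
const Child2 = ({ setCounter }) => (
  <button onClick={() => setCounter((prev) => prev + 1)}>Increment</button>
);
```

7. What are Pure Components?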

Pure Components are class components that extend `React.PureComponent`; function components get equivalent behavior by being wrapped in the `React.memo` higher-order component. `React.PureComponent` implements `shouldComponentUpdate` with a shallow comparison of props and state, and `React.memo` shallowly compares props, to determine whether the component should re-render. If nothing has changed, React skips re-rendering, improving performance.

Pure Components are useful when you have components that render the same output given the same input and don't rely on external state or side effects. By using Pure Components, you can prevent unnecessary re-renders and optimize your React application.
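The shallow comparison can be sketched in plain JavaScript. This `shallowEqual` is a simplified illustration, not React's internal code, though it follows the same idea of comparing each top-level key with `Object.is`. It also shows why passing a freshly created object as a prop defeats the optimization: the contents may be identical, but the reference is new.

```js
// Simplified shallow comparison: same own keys, and each value is Object.is-equal.
function shallowEqual(a, b) {
  if (Object.is(a, b)) return true;
  if (typeof a !== 'object' || a === null || typeof b !== 'object' || b === null) {
    return false;
  }
  const keysA = Object.keys(a);
  const keysB = Object.keys(b);
  if (keysA.length !== keysB.length) return false;
  return keysA.every(
    (key) => Object.prototype.hasOwnProperty.call(b, key) && Object.is(a[key], b[key])
  );
}

const style = { color: 'red' };
// Same references inside: considered equal, so a re-render could be skipped.
console.log(shallowEqual({ title: 'Hi', style }, { title: 'Hi', style })); // true
// Equal contents but a new nested object: considered different.
console.log(shallowEqual({ style: { color: 'red' } }, { style: { color: 'red' } })); // false
```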

8. What is the difference between `createElement` and `cloneElement`?

`createElement`: Creates a new React element from a type (e.g., `'div'` or a React component), props, and children.

```jsx
React.createElement('div', { className: 'container' }, 'Hello World');
```

`cloneElement`: Clones an existing React element and shallowly merges in new props, keeping the original element's key, ref, children, and any props you don't override.

```jsx
const element = <button className="btn">Click Me</button>;
// className is overridden; the children "Click Me" are kept
const clonedElement = React.cloneElement(element, { className: 'btn-primary' });
```

9. What is the role of PropTypes in React?

PropTypes is a library used in React to validate the props passed to a component. PropTypes help you define the types of props a component expects and provide warnings in the console if the props are of the wrong type. PropTypes are useful for documenting component APIs, catching bugs early, and ensuring that components receive the correct data. Note that PropTypes checks only run in development, and React 19 no longer runs `propTypes` checks at all, so TypeScript is now the more common way to type props.

Here's an example of using PropTypes:

```jsx
import PropTypes from 'prop-types';

const Greeting = ({ name }) => <h1>Hello, {name}!</h1>;

Greeting.propTypes = {
  name: PropTypes.string.isRequired,
};
```

In this example, the `Greeting` component expects a prop named `name` of type `string`. If the `name` prop is not provided or is not a string, a warning will be displayed in the console.

10. What are stateless components?

Stateless components do not manage internal state; they receive data via props and focus solely on rendering UI based on that data.

Example:

```jsx
function StatelessComponent({ message }) {
  return <div>{message}</div>;
}
```

11. What are stateful components?

Stateful components manage their own internal state and can update their UI based on user interactions or other events.

Example:

```jsx
function StatefulComponent() {
  const [count, setCount] = React.useState(0);
  return (
    <div>
      <p>{count}</p>
      <button onClick={() => setCount(count + 1)}>Increment</button>
    </div>
  );
}
```

12. What are the benefits of using hooks in React?

Hooks allow you to use state and other React features in functional components, eliminating the need for classes. They simplify code by reducing reliance on lifecycle methods, improve code readability, and make it easier to reuse stateful logic across components. Common hooks like `useState` and `useEffect` help manage state and side effects.

13. What are the rules of React hooks?

React hooks follow a set of rules to ensure they are used correctly:

- Only call hooks at the top level: Hooks should only be called from the top level of a functional component or custom hook. They should not be called inside loops, conditions, or nested functions.
- Only call hooks from React functions: Hooks should only be called from React components or custom hooks. They should not be called from regular JavaScript functions.
- Use hooks in the same order: Hooks must be called in the same order on every render, because React associates each hook's state with its position in the call sequence.
- Don't call hooks conditionally: Hooks must not be called inside `if` statements or after an early `return`, since skipping a call on some renders would shift the position of every hook after it.
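Why the order matters can be seen in a toy model. React keeps each component's hook state in call order, so the first `useState` call on every render reads the first slot, the second reads the second, and so on. `createToyRenderer` is a hypothetical, heavily simplified stand-in (not React's implementation) that stores state in an array indexed by call order; a hook called conditionally shifts every later hook onto the wrong slot.

```js
// Toy model: hook state lives in an array, indexed by the order of the hook calls.
function createToyRenderer(component) {
  const slots = [];
  let cursor = 0;

  function useState(initialValue) {
    const index = cursor++; // this call's position in the current render
    if (!(index in slots)) slots[index] = initialValue; // first time: initialize the slot
    const setState = (value) => {
      slots[index] = value;
    };
    return [slots[index], setState];
  }

  return {
    render(props) {
      cursor = 0; // every render starts reading from the first slot again
      return component(props, { useState });
    },
  };
}

// Breaks the rules: the first hook is only called when showName is true.
function Profile(props, { useState }) {
  let name = null;
  if (props.showName) {
    [name] = useState('Ada');
  }
  const [theme] = useState('dark');
  return { name, theme };
}

const renderer = createToyRenderer(Profile);
console.log(renderer.render({ showName: true })); // { name: 'Ada', theme: 'dark' }
console.log(renderer.render({ showName: false })); // { name: null, theme: 'Ada' } <- wrong slot
```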

14. What is the difference between `useEffect` and `useLayoutEffect` in React?

- `useEffect`: Usually runs after the browser has painted the screen, so it doesn't block painting. It's deferred until after the render is committed to the DOM and is the right place for most side effects, such as data fetching and subscriptions.
- `useLayoutEffect`: Runs synchronously after React has updated the DOM but before the browser paints the screen. It's used for side effects that need to read layout or change the DOM before the user sees the result, such as measuring an element to position a tooltip.

In most cases, you should use `useEffect`. Reach for `useLayoutEffect` only when you need to measure or update the DOM before the browser paints, since it blocks painting and can hurt performance.

Example:

```jsx
import React, { useEffect, useLayoutEffect, useRef } from 'react';

function Example() {
  const ref = useRef(null);

  useEffect(() => {
    console.log('useEffect: Runs after the browser paints');
  });

  useLayoutEffect(() => {
    console.log('useLayoutEffect: Runs before the browser paints');
    console.log('Element width:', ref.current.offsetWidth);
  });

  return <div ref={ref}>Hello</div>;
}
```

15. What does the dependency array of `useEffect` affect?

The dependency array of `useEffect` specifies the values that the effect depends on. After each render, React compares every dependency with its value from the previous render using `Object.is`; if any of them changed, the effect is re-run. If the dependency array is empty, the effect runs only once after the initial render.

Example:

```jsx
useEffect(() => {
  // Effect code
}, [value1, value2]);
```

In this example, the effect runs after the initial render and again whenever `value1` or `value2` changes. If the dependency array is omitted entirely, the effect runs after every render.

16. What is the `useRef` hook in React and when should it be used?

The `useRef` hook in React creates a mutable reference object that persists across renders. It can be used to store references to DOM elements, manage focus, or store mutable values whose changes should not trigger re-renders.

Example:

```jsx
import { useRef } from 'react';

function TextInputWithFocusButton() {
  const inputRef = useRef(null);
  const handleClick = () => {
    inputRef.current.focus();
  };
  return (
    <>
      <input ref={inputRef} type="text" />
      <button onClick={handleClick}>Focus Input</button>
    </>
  );
}
```

In this example, the `inputRef` is used to store a reference to the input element, and the `handleClick` function focuses the input when the button is clicked.

17. Why does React recommend against mutating state?

React recommends against mutating state directly because it can lead to unexpected behavior and bugs. When you mutate state directly, React may not detect the changes, causing the UI to become out of sync with the application's state. To ensure that React detects state changes correctly, you should always update state using the

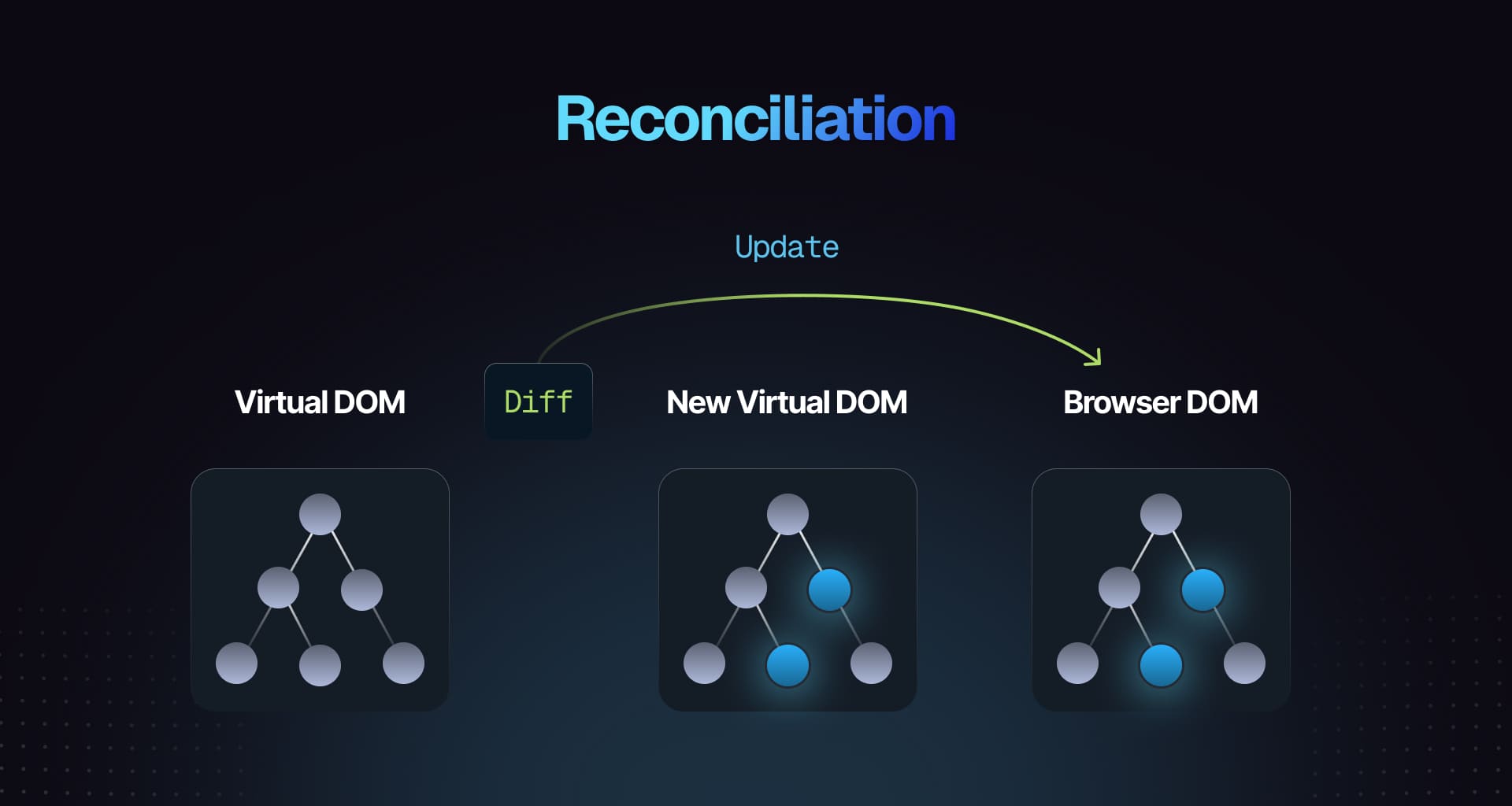

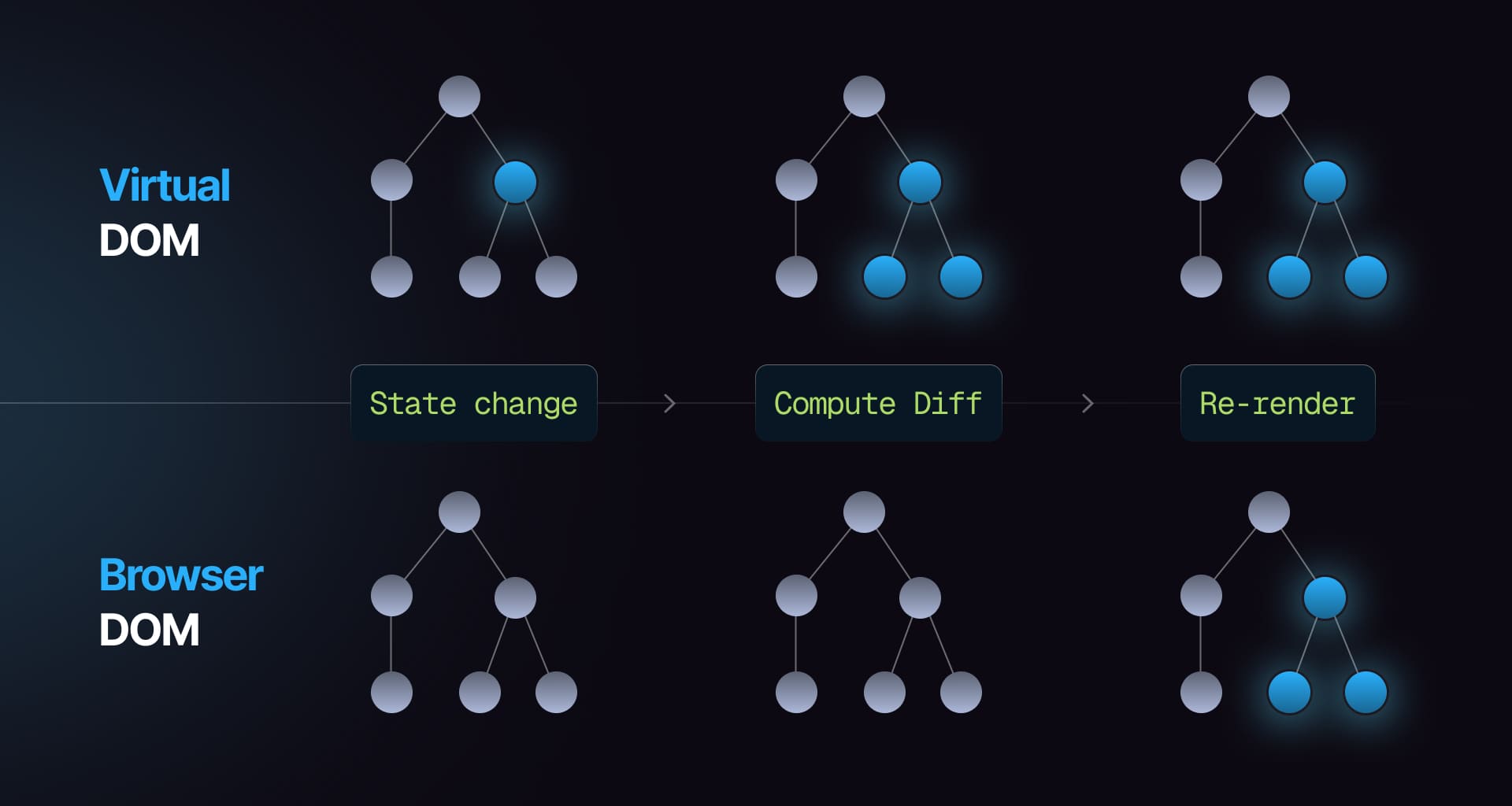

setStatefunction or hooks likeuseState.18. What is reconciliation in React?

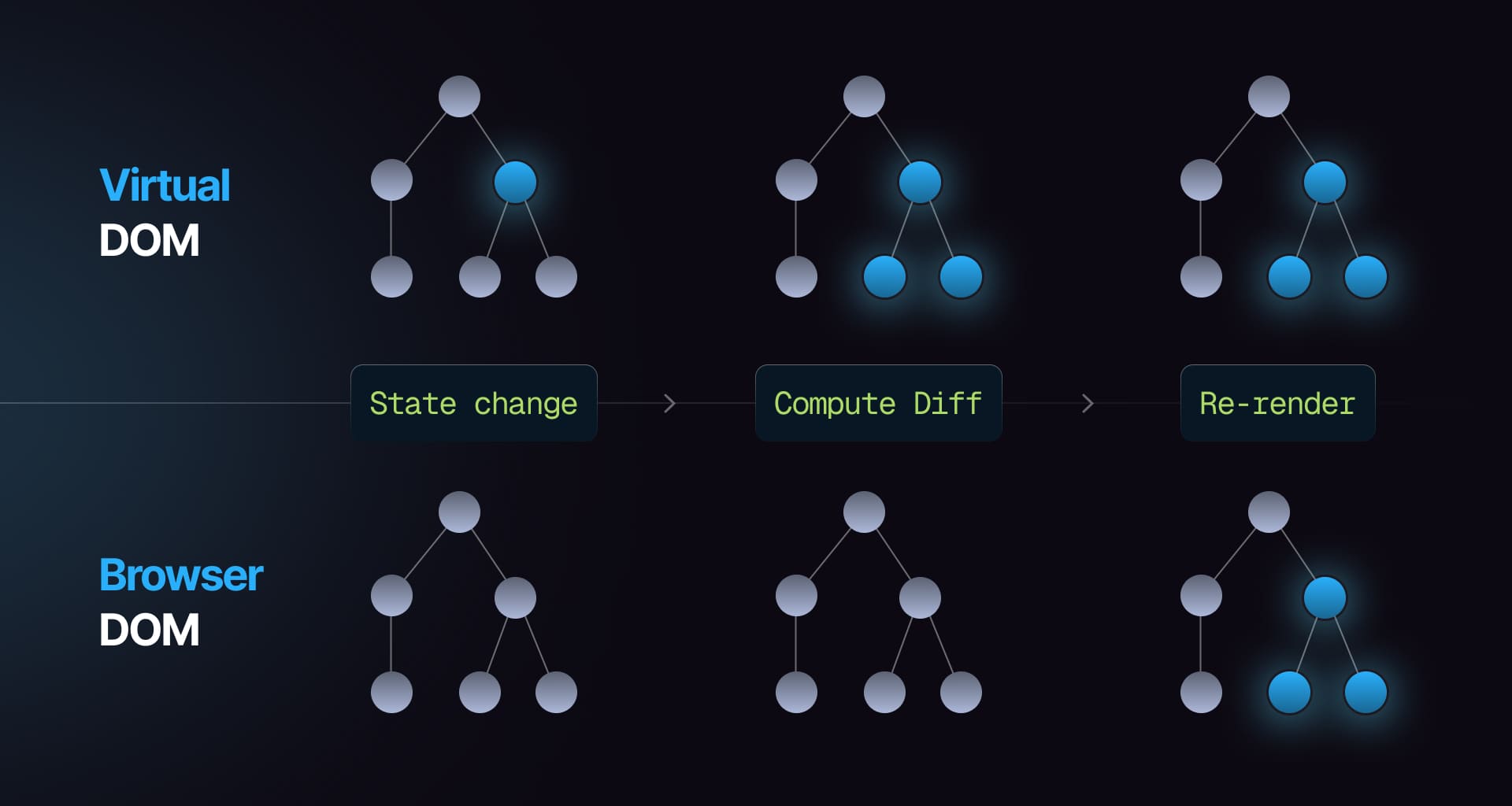

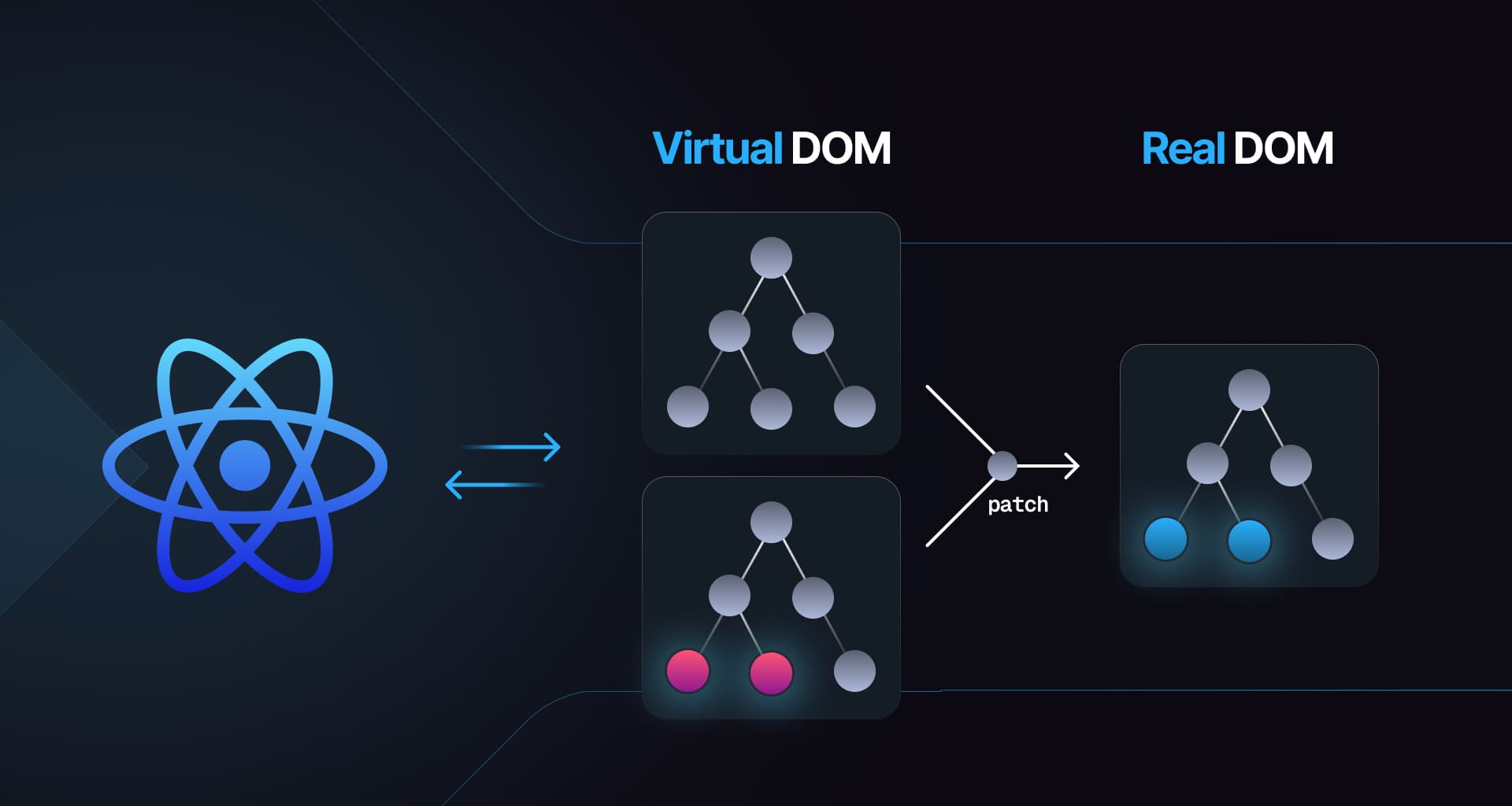

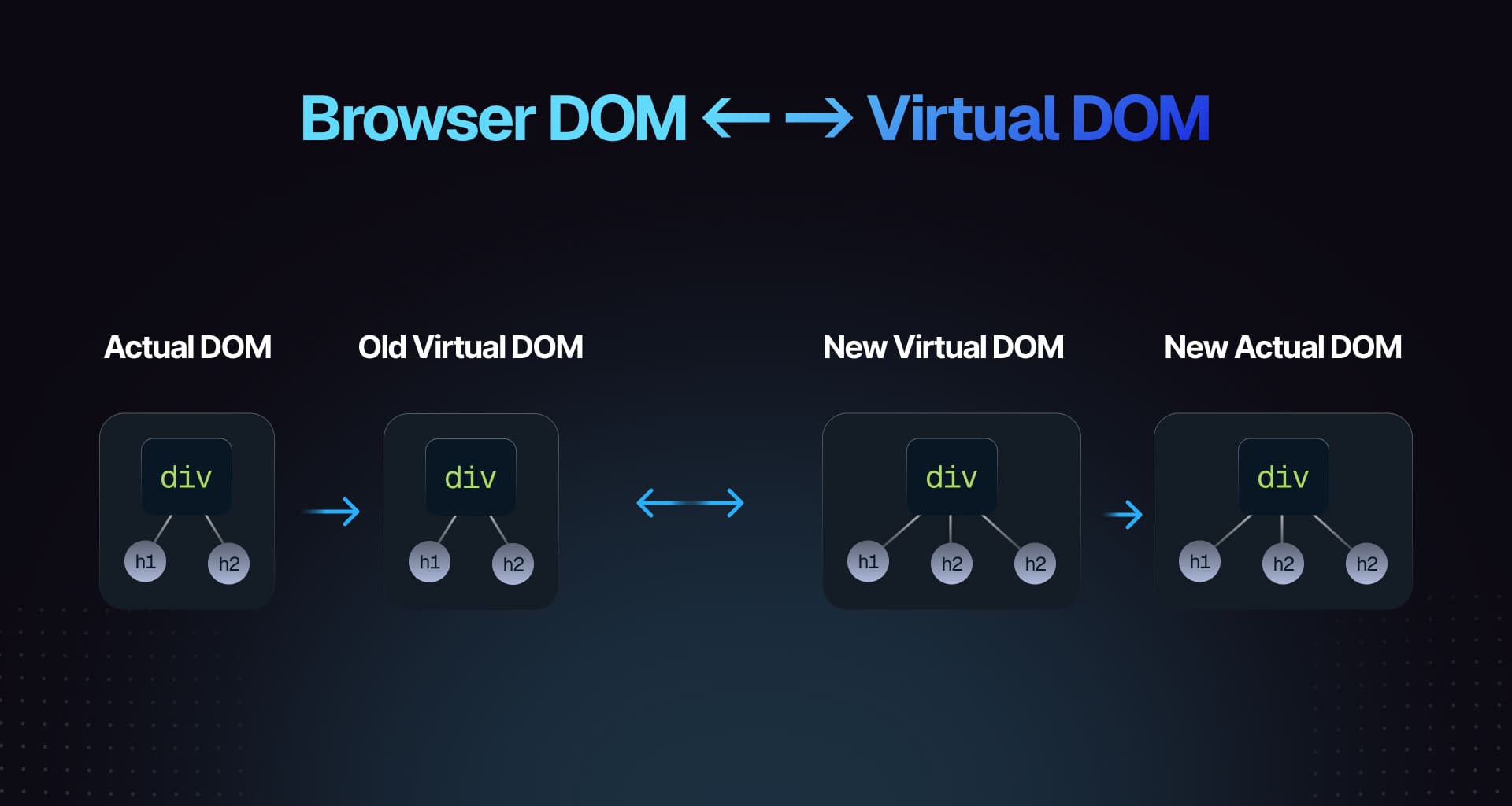

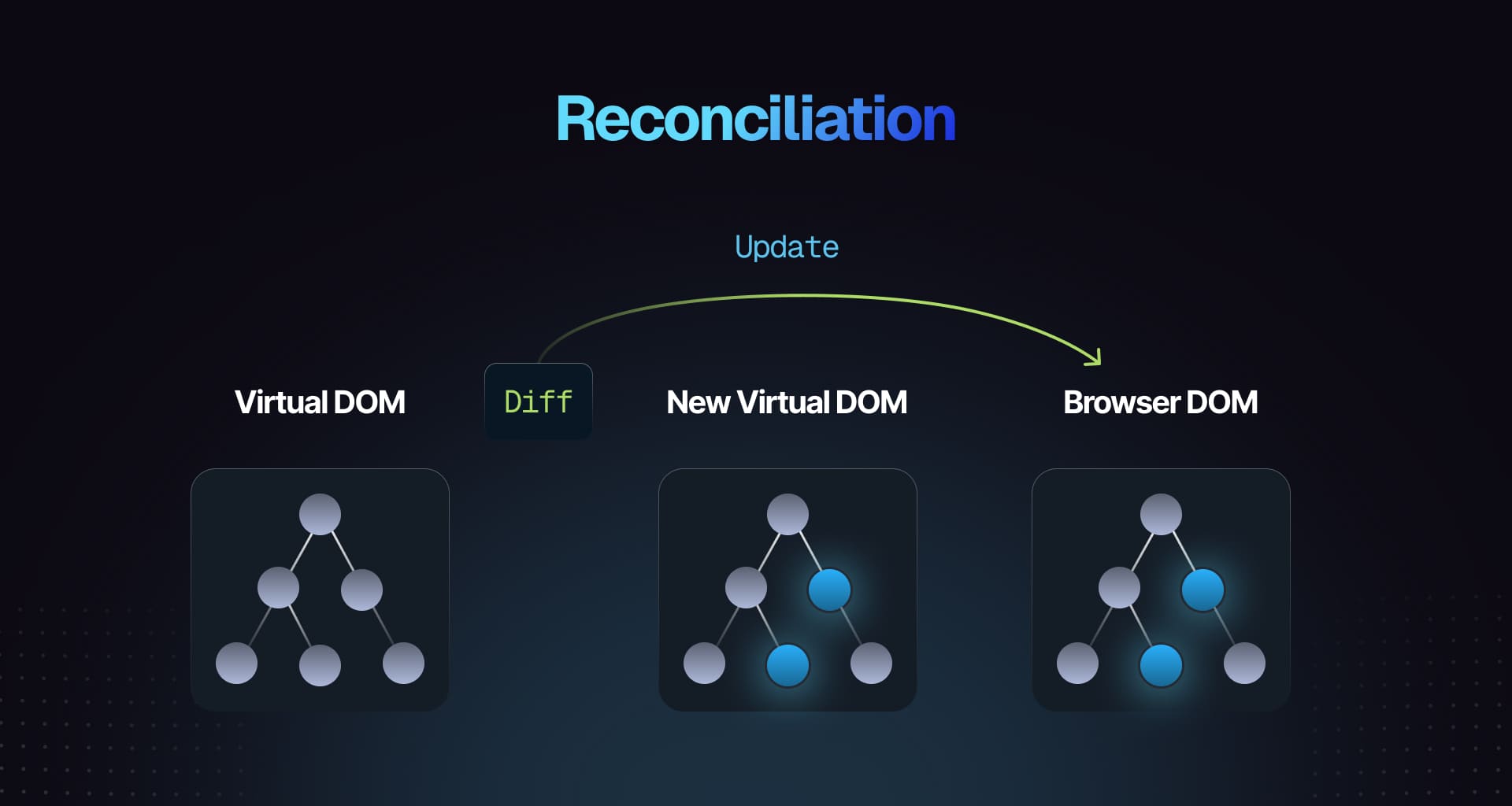

Reconciliation is the process by which React updates the DOM to match the virtual DOM after a component's state or props change. React compares the previous virtual DOM tree with the new one and, using heuristics (such as treating elements of different types as different trees and using keys to match list items), works out a small set of changes needed to update the DOM efficiently. Reconciliation is an essential part of React's performance strategy because it minimizes the number of DOM manipulations required.
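Two of the heuristics behind this comparison are easy to sketch: elements of different types are treated as entirely new trees, while for elements of the same type React keeps the underlying DOM node and updates only the attributes that changed. `diffElement` is a hypothetical toy that applies just those two rules to a single element (no children, no keys); React's real algorithm is considerably more involved.

```js
// Toy diff for one element: returns the DOM operations needed to go from prev to next.
function diffElement(prev, next) {
  if (prev.type !== next.type) {
    // Different type: throw away the old node and build a new one.
    return [{ op: 'replace', type: next.type }];
  }
  // Same type: keep the node and patch only the props that changed.
  const ops = [];
  const keys = new Set([...Object.keys(prev.props), ...Object.keys(next.props)]);
  for (const key of keys) {
    if (!(key in next.props)) {
      ops.push({ op: 'removeProp', key });
    } else if (!Object.is(prev.props[key], next.props[key])) {
      ops.push({ op: 'setProp', key, value: next.props[key] });
    }
  }
  return ops;
}

const prevButton = { type: 'button', props: { className: 'btn', disabled: false } };
const nextButton = { type: 'button', props: { className: 'btn-primary', disabled: false } };
console.log(diffElement(prevButton, nextButton)); // a single setProp op for className
console.log(diffElement(prevButton, { type: 'a', props: {} })); // a single replace op
```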

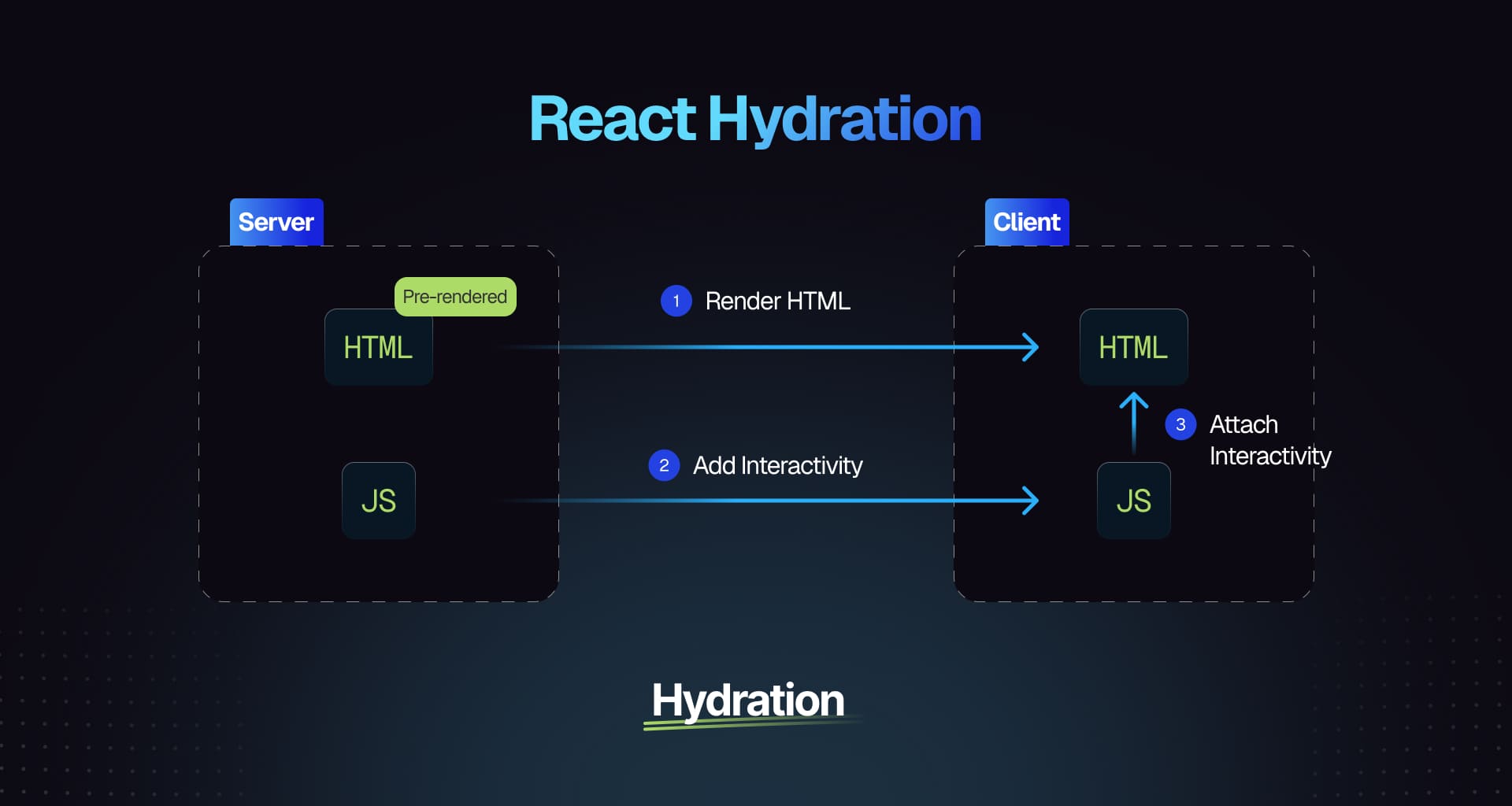

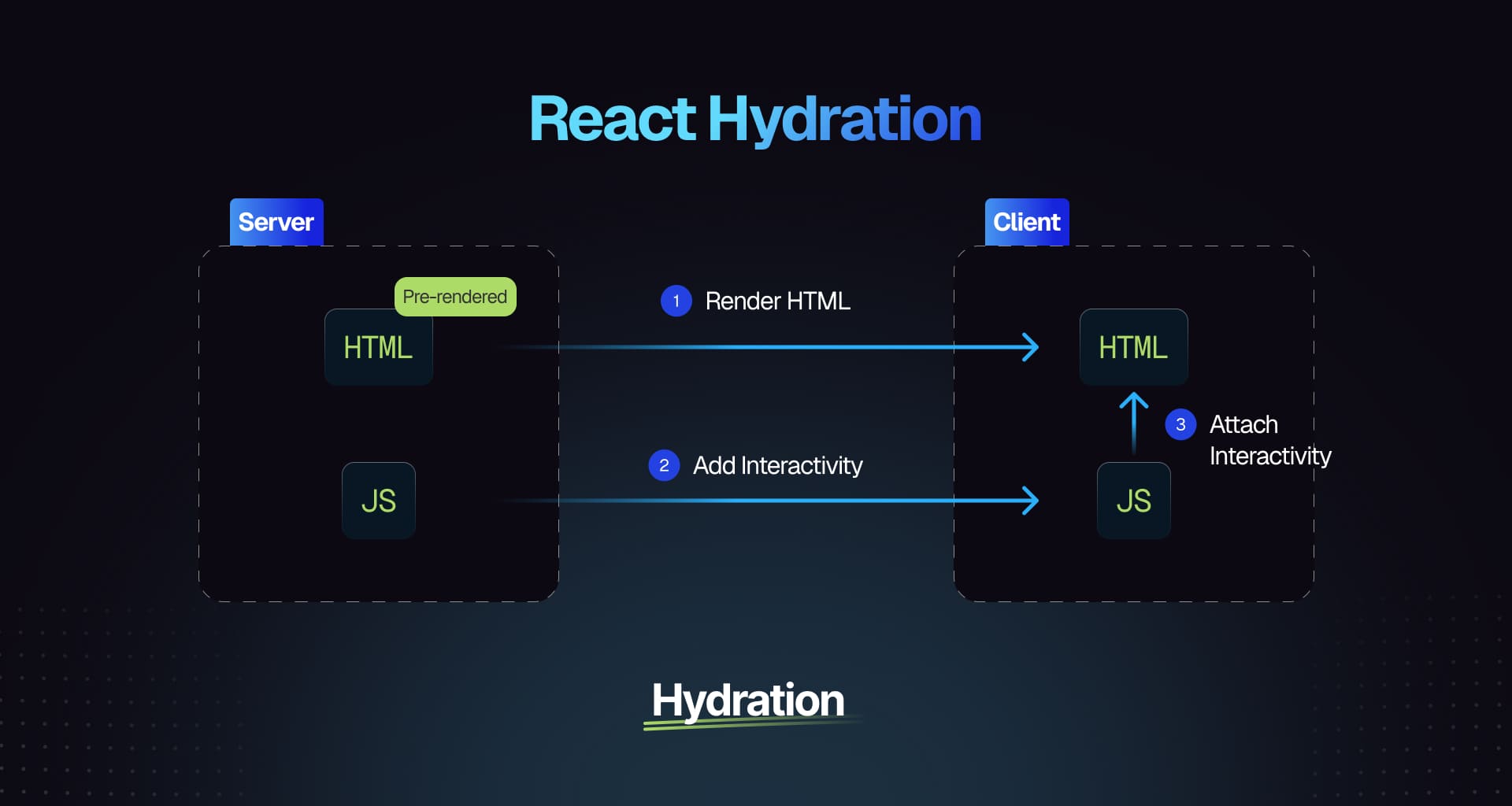

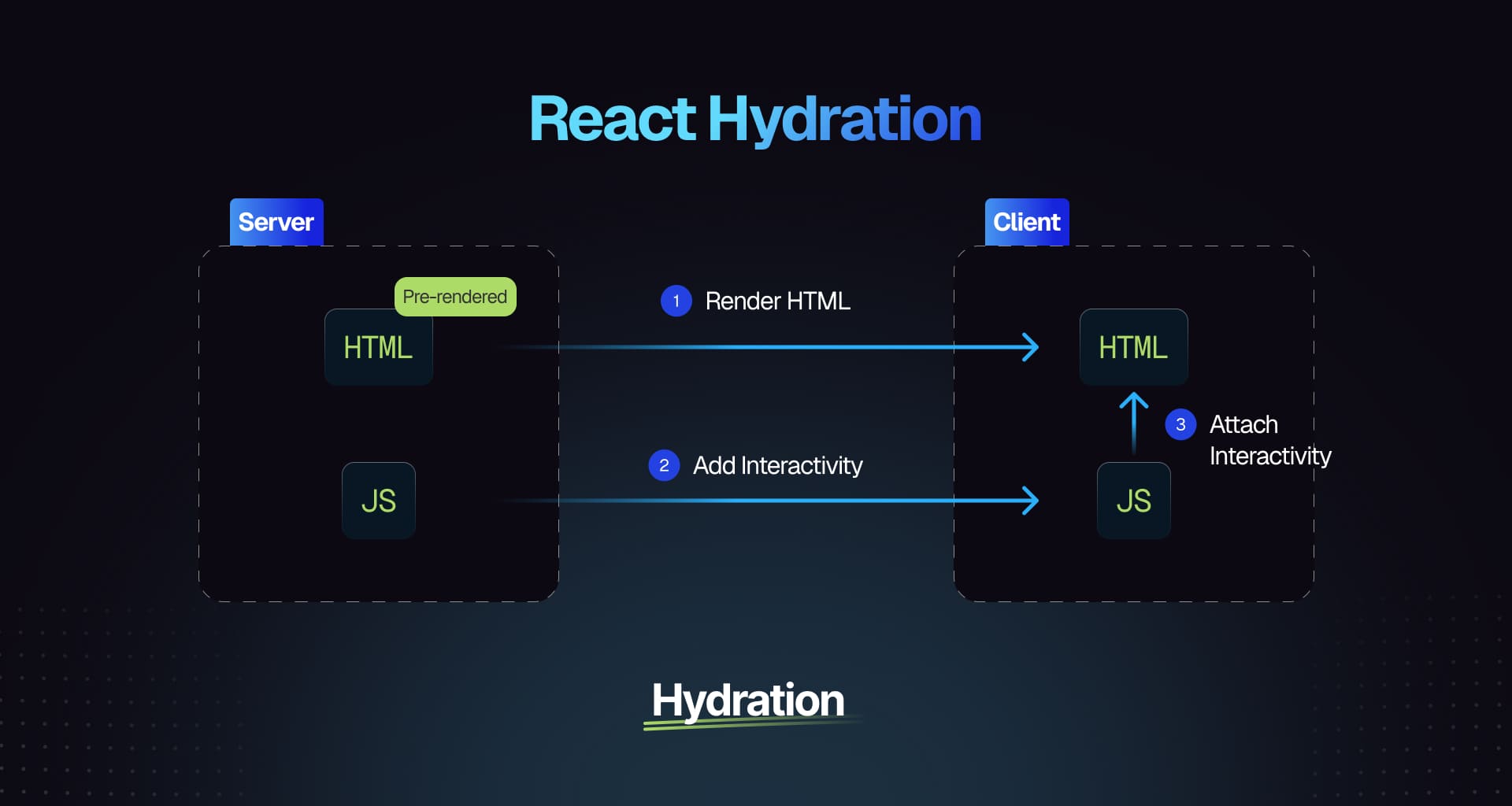

19. What is hydration in React?

Hydration is the process by which React makes server-rendered HTML interactive on the client. Instead of recreating the DOM, React renders the component tree in the browser (for example with `hydrateRoot` from `react-dom/client`), matches it against the existing server-rendered markup, and attaches event listeners to those existing DOM nodes. Hydration is necessary for server-side rendered React applications so that the content sent by the server becomes interactive. React expects the client render to produce the same markup as the server; mismatches trigger warnings and can force React to re-render the affected part of the page on the client.

20. Explain higher-order components (HOCs).

Higher-order components (HOCs) are functions that take a component as an argument and return a new component with enhanced functionality. HOCs are used to share code between components, add additional props or behavior to components, and abstract common logic into reusable functions.

Example:

```jsx
const withLogger = (WrappedComponent) => {
  // Returns a new component that logs, then renders the wrapped component with the same props
  return (props) => {
    console.log('Component rendered:', WrappedComponent.name);
    return <WrappedComponent {...props} />;
  };
};

// Example component to enhance
const MyComponent = () => <p>Hello</p>;

const EnhancedComponent = withLogger(MyComponent);
```

In this example, the `withLogger` HOC logs the name of the component every time it renders. The `EnhancedComponent` is a new component that includes the logging functionality.

21. What are some common performance optimization techniques in React?

Some common performance optimization techniques in React include:

- Memoization: Use `React.memo` to skip re-rendering components whose props haven't changed, and `useMemo` and `useCallback` to cache expensive computations and keep object and function props stable between renders.
- Code Splitting: Split your code into smaller chunks and load them dynamically to reduce the initial bundle size and improve loading times.
- Lazy Loading: Use lazy loading to load components or resources only when they are needed, reducing the initial load time of your application.
- Virtualization: Use virtualization (also called windowing) to render only the visible rows of long lists or tables, improving performance.
- Server-Side Rendering: Use server-side rendering to pre-render your React components on the server and send the HTML to the client, reducing the time to first paint.
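The core of virtualization is a small calculation: from the scroll position, work out which slice of rows is visible and render only that slice. The sketch below assumes fixed-height rows; `getVisibleRange` and its `overscan` parameter (extra rows rendered above and below the viewport to reduce flicker while scrolling) are hypothetical names. Libraries such as react-window build on the same idea.

```js
// Returns the [start, end) range of row indexes to render for a fixed-height list.
function getVisibleRange({ scrollTop, viewportHeight, rowHeight, rowCount, overscan = 0 }) {
  const firstVisible = Math.floor(scrollTop / rowHeight);
  const lastVisibleExclusive = Math.ceil((scrollTop + viewportHeight) / rowHeight);
  return {
    start: Math.max(0, firstVisible - overscan),
    end: Math.min(rowCount, lastVisibleExclusive + overscan),
  };
}

// 10,000 rows of 20px in a 100px viewport, scrolled to 410px:
const range = getVisibleRange({
  scrollTop: 410,
  viewportHeight: 100,
  rowHeight: 20,
  rowCount: 10000,
  overscan: 2,
});
console.log(range); // { start: 18, end: 28 }: only 10 rows are rendered
```

The rendered rows would then be absolutely positioned at `index * rowHeight` inside a container that is `rowCount * rowHeight` pixels tall, so the scrollbar still reflects the full list.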

22. What is the purpose of the `useReducer` hook in React?

The `useReducer` hook in React is used to manage complex state logic in functional components. It is an alternative to `useState` and allows you to update state based on the previous state and an action. `useReducer` is useful for managing state transitions that depend on the current state and require more complex logic than simple updates.

Example:

```jsx
import { useReducer } from 'react';

const initialState = { count: 0 };

// A pure function: takes the current state and an action, returns the next state
const reducer = (state, action) => {
  switch (action.type) {
    case 'increment':
      return { count: state.count + 1 };
    case 'decrement':
      return { count: state.count - 1 };
    default:
      return state;
  }
};

const Counter = () => {
  const [state, dispatch] = useReducer(reducer, initialState);
  return (
    <div>
      Count: {state.count}
      <button onClick={() => dispatch({ type: 'increment' })}>Increment</button>
      <button onClick={() => dispatch({ type: 'decrement' })}>Decrement</button>
    </div>
  );
};
```

In this example, the `useReducer` hook is used to manage the state of a counter component based on different actions.

23. How do you test a React application?

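React applications are commonly tested with Jest and React Testing Library. Jest serves as the test runner and assertion framework, while React Testing Library provides utilities for rendering components and interacting with them the way a user would, for example by finding elements by their text or role and firing clicks.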
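Not every test needs a rendered component. Because a reducer like the counter from the `useReducer` question is a pure function, its logic can be checked without rendering anything. The sketch below repeats that reducer and adds `applyActions`, a hypothetical helper that replays a list of actions the way a sequence of `dispatch` calls would.

```js
const initialState = { count: 0 };

// Same pure reducer as in the useReducer example: (state, action) => next state.
function reducer(state, action) {
  switch (action.type) {
    case 'increment':
      return { count: state.count + 1 };
    case 'decrement':
      return { count: state.count - 1 };
    default:
      return state;
  }
}

// Hypothetical helper: replay actions in order, starting from the given state.
function applyActions(actions, state = initialState) {
  return actions.reduce(reducer, state);
}

console.log(applyActions([{ type: 'increment' }, { type: 'increment' }, { type: 'decrement' }])); // { count: 1 }
console.log(applyActions([{ type: 'unknown' }]) === initialState); // true: unknown actions return the same state
```

In a Jest test file, the first check might read `expect(applyActions([...])).toEqual({ count: 1 })`, leaving React Testing Library for tests that need to click through the rendered UI.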

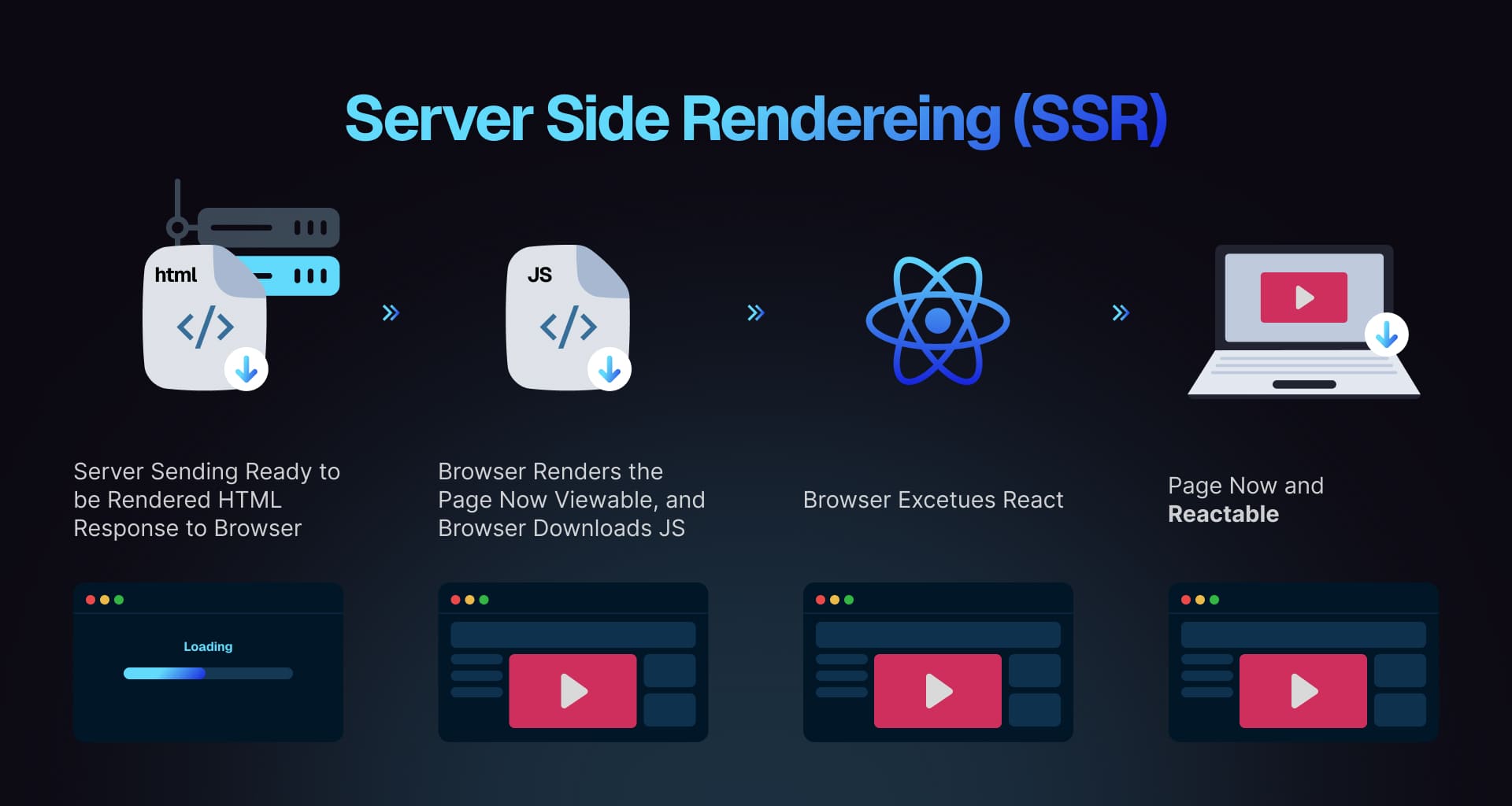

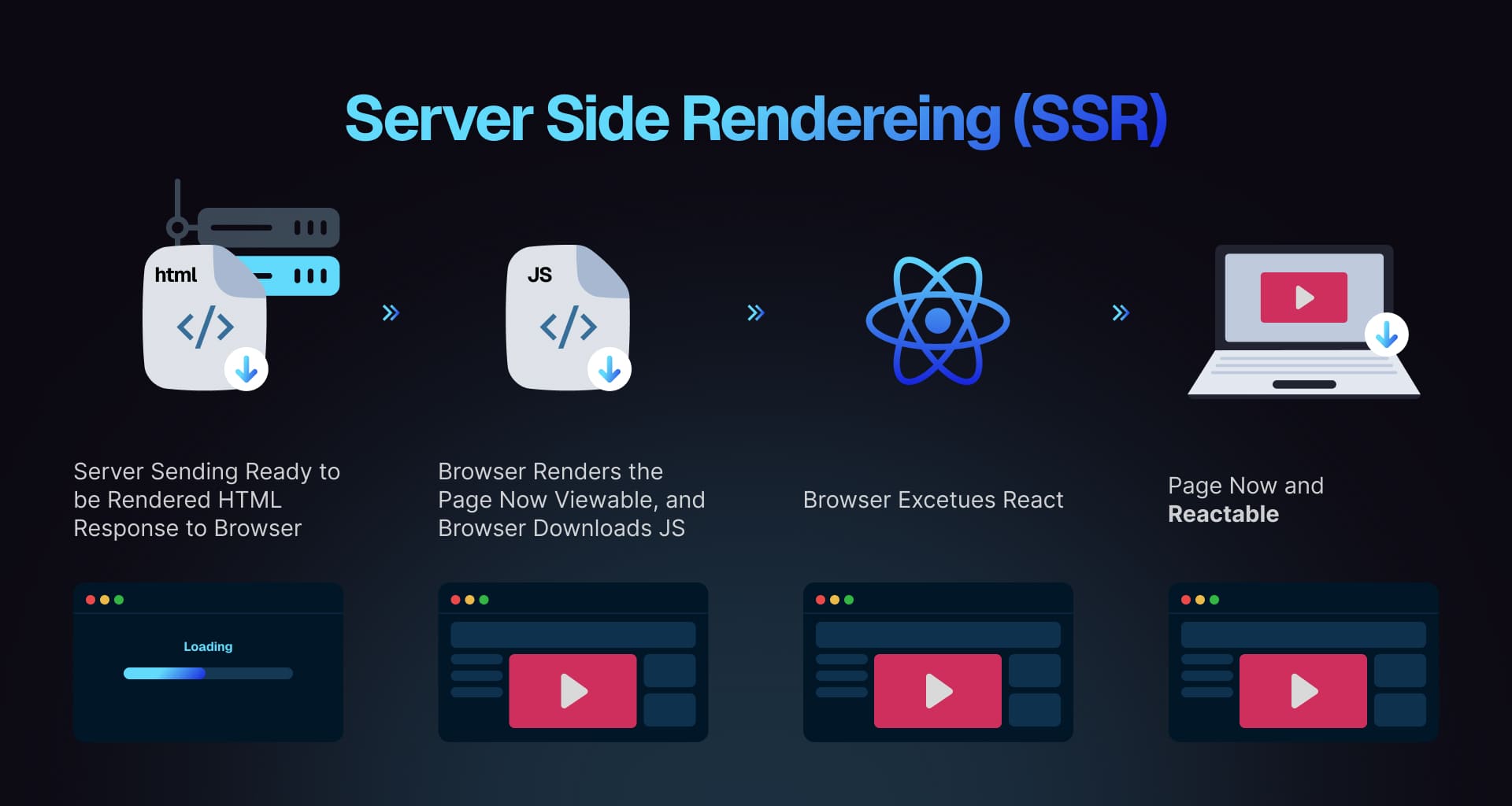

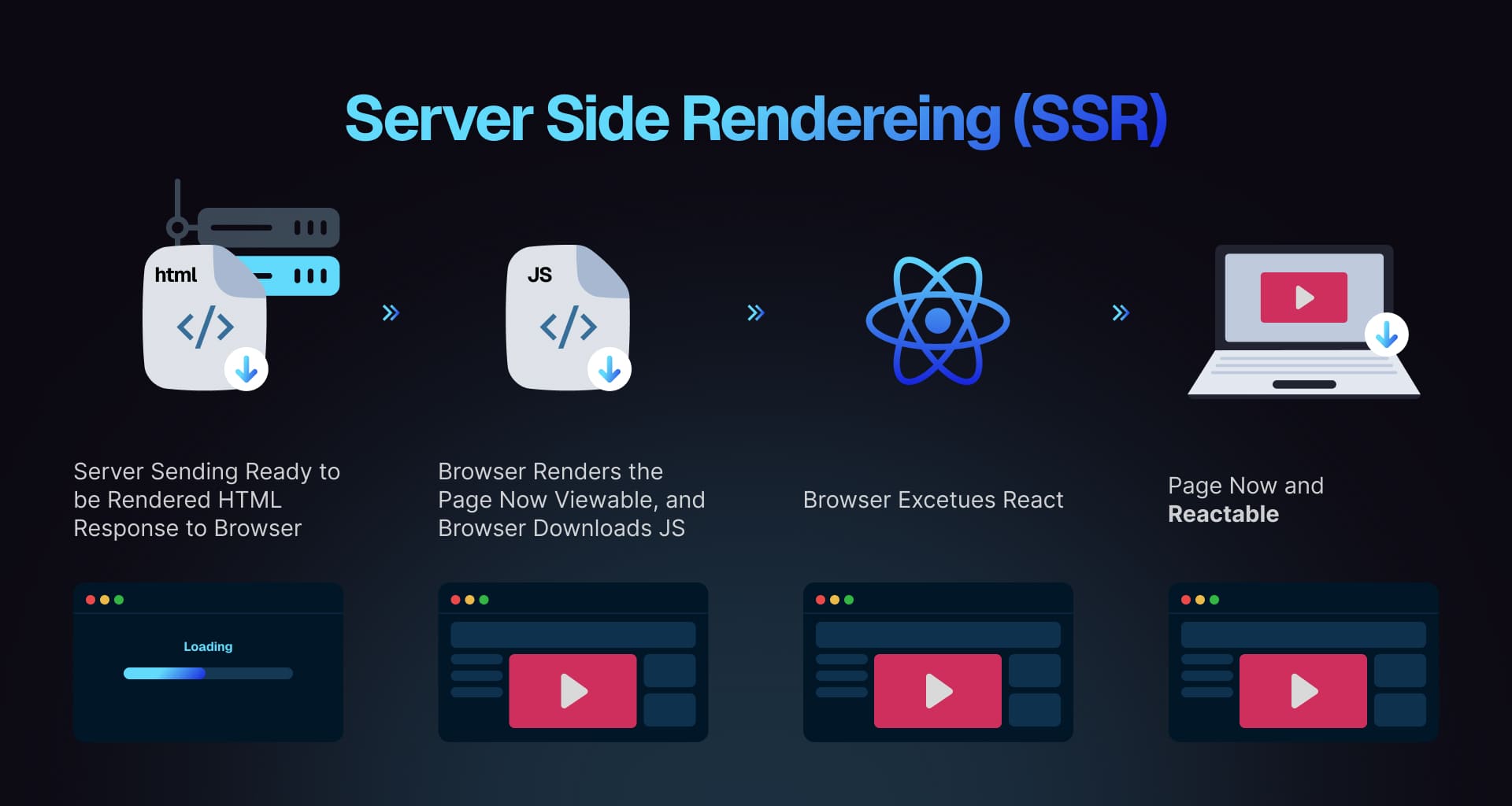

24. What is server-side rendering (SSR)?

Server-side rendering (SSR) is a technique used to render React components to HTML on the server for each request and send that HTML to the client. SSR improves the performance of React applications by reducing the time to first paint, and it makes the content available to search engines and to users on slow internet connections before the JavaScript has loaded.

25. What is Static site generation (SSG)?

Static site generation (SSG) is a technique used to pre-render static HTML pages at build time. SSG generates static HTML files for each page of a website, which can be served directly to users without the need for server-side rendering. SSG improves performance, reduces server load, and simplifies hosting and deployment.

26. What is lazy loading in React?

Lazy loading is a technique used to load components or resources only when they are needed. Lazy loading helps reduce the initial load time of your application by deferring the loading of non-essential components until they are required. React provides the `React.lazy` function and the `Suspense` component to implement lazy loading in your application.

27. What is the purpose of the `useContext` hook in React?

The `useContext` hook in React is used to access the value of a context provider in a functional component. It allows you to consume context values without using a consumer component. `useContext` is useful for accessing global data or settings in your application without passing props down the component tree.

Example:

```jsx
const ThemeContext = React.createContext('light');

const ThemeProvider = ({ children }) => {
  return <ThemeContext.Provider value="dark">{children}</ThemeContext.Provider>;
};

const ThemeConsumer = () => {
  const theme = React.useContext(ThemeContext);
  return <div>Theme: {theme}</div>;
};
```

In this example, the `ThemeConsumer` component uses the `useContext` hook to read the value from the nearest `ThemeContext` provider above it ("dark" when rendered inside `ThemeProvider`); without a provider, it falls back to the default value, "light".

28. What is the purpose of the `useMemo` hook in React?

The `useMemo` hook in React is used to cache the result of an expensive computation between re-renders, so it isn't recomputed on every render. `useMemo` takes a function and an array of dependencies and returns the memoized value. The memoized value is recalculated only when the dependencies change.

Example:

```jsx
const memoizedValue = useMemo(() => computeExpensiveValue(a, b), [a, b]);
```

In this example, the result of `computeExpensiveValue(a, b)` is cached and only recomputed when `a` or `b` changes.

29. What is the purpose of the `useCallback` hook in React?

The `useCallback` hook in React is used to memoize callback functions: it returns the same function instance between renders until one of its dependencies changes. This helps prevent unnecessary re-renders of child components wrapped in `React.memo` that receive the callback as a prop, and keeps functions stable when they are dependencies of other hooks. `useCallback` takes a function and an array of dependencies and returns a memoized version of the function.

Example:

```jsx
const memoizedCallback = useCallback(() => {
  doSomething(a, b);
}, [a, b]);
```

In this example, the function that calls `doSomething(a, b)` is memoized: the same function instance is returned on every render until `a` or `b` changes.

30. What is the purpose of the `useImperativeHandle` hook in React?

The `useImperativeHandle` hook in React is used to customize the value that is exposed to parent components through a ref when using `React.forwardRef`. It allows you to define which properties or methods should be accessible to parent components, instead of exposing the underlying DOM node directly.

Example:

```jsx
import React, { useImperativeHandle, useRef } from 'react';

const ChildComponent = React.forwardRef((props, ref) => {
  const inputRef = useRef(null);
  // Expose only a focus() method to the parent, not the whole input DOM node
  useImperativeHandle(ref, () => ({
    focus: () => {
      inputRef.current.focus();
    },
  }));
  return <input ref={inputRef} />;
});

const ParentComponent = () => {
  const childRef = useRef(null);
  const handleClick = () => {
    childRef.current.focus();
  };
  return (
    <>
      <ChildComponent ref={childRef} />
      <button onClick={handleClick}>Focus Input</button>
    </>
  );
};
```

In this example, the `useImperativeHandle` hook exposes a `focus` method, which focuses the inner input through `inputRef`, to the parent component that holds the ref.

50 Must-know HTML, CSS and JavaScript Interview Questions by Ex-interviewers

Discover fundamental HTML, CSS, and JavaScript knowledge with these expert-crafted interview questions and answers. Perfect for freshers preparing for junior developer roles.

Author: GreatFrontEnd Team | 43 min read | Jan 28, 2025

HTML, CSS, and JavaScript are fundamental skills for any aspiring web developer, and securing a job in this field can be a challenging endeavor, especially for beginners. A critical part of the interview process is the technical interview, where your proficiency in these core web technologies is thoroughly assessed. To help you prepare and boost your confidence, we’ve compiled a list of the top 50 essential interview questions and answers covering HTML, CSS, and JavaScript that are frequently asked in interviews.

1. What Is Hoisting in JavaScript?

Hoisting refers to JavaScript's behavior of processing variable and function declarations before any code in their scope runs, which makes it look as if the declarations were moved to the top of their scope. While declarations are hoisted, initializations are not.

Example with `var`:

```js
console.log(foo); // undefined
var foo = 1;
console.log(foo); // 1
```

Visualized as:

```js
var foo;
console.log(foo); // undefined
foo = 1;
console.log(foo); // 1
```

Variables Declared with `let`, `const`, and `class`

These are hoisted but remain uninitialized, leading to a `ReferenceError` if accessed before declaration.

```js
console.log(bar); // ReferenceError
let bar = 'value';
```

Function Declarations vs. Expressions

Function declarations are fully hoisted (both declaration and definition), while function expressions are only partially hoisted (declaration without initialization).

```js
console.log(declared()); // Works
function declared() {
  return 'Declared function';
}

console.log(expr); // undefined
console.log(expr()); // TypeError: expr is not a function
var expr = function () {
  return 'Function expression';
};
```

Imports

Import statements are hoisted, making imported modules available throughout the file.

```js
foo.doSomething(); // Works: the import below is hoisted
import foo from './foo';
```

Explore the concept of "hoisting" in JavaScript on GreatFrontEnd
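The "hoisted but uninitialized" state of `let` and `const` is often called the temporal dead zone (TDZ): the binding exists from the start of its scope, but reading it before the declaration line has executed throws. The TDZ is about execution time, not position in the source, as this small example shows (the names are illustrative).

```js
function readLaterValue() {
  return laterValue; // refers to the `let laterValue` declared below, not to a global
}

let tdzError = null;
try {
  readLaterValue(); // runs before `let laterValue` has executed: still in the TDZ
} catch (error) {
  tdzError = error;
}

let laterValue = 'initialized';

console.log(tdzError instanceof ReferenceError); // true
console.log(readLaterValue()); // initialized (the declaration has now run)
```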

2. How Do `let`, `var`, and `const` Differ?

1. Scope:

- `var`: Function-scoped or globally scoped.
- `let` and `const`: Block-scoped, confined to their nearest enclosing block.

```js
{
  var a = 1;
  let b = 2;
  const c = 3;
}
console.log(a); // 1 (var ignores block scope)
console.log(b); // ReferenceError
console.log(c); // ReferenceError
```

2. Initialization:

- `var` and `let`: Can be declared without initialization.
- `const`: Must be initialized during declaration.

```js
var a;
let b;
const c; // SyntaxError: Missing initializer
```

3. Redeclaration:

- `var`: Allows redeclaration in the same scope.
- `let` and `const`: Redeclaration is not allowed.

```js
var x = 1;
var x = 2; // Valid
let y = 1;
let y = 2; // SyntaxError
```

4. Reassignment:

- `var` and `let`: Reassignment is allowed.
- `const`: Reassignment is not allowed.

```js
const z = 1;
z = 2; // TypeError
```

5. Hoisting:

- `var`: Hoisted and initialized to `undefined`.
- `let` and `const`: Hoisted but not initialized, causing a `ReferenceError` if accessed before declaration.

```js
console.log(a); // undefined
var a = 1;
console.log(b); // ReferenceError
let b = 2;
```

Explore the differences between `let`, `var`, and `const` on GreatFrontEnd

3. What Is the Difference Between `==` and `===`?

Equality Operator (`==`):

- Converts operands to a common type before comparison.

- May produce unexpected results due to type coercion.

```js
42 == '42'; // true
0 == false; // true
null == undefined; // true
```

Strict Equality Operator (`===`):

- No type conversion; checks both value and type.
- Ensures accurate comparisons.

```js
42 === '42'; // false
0 === false; // false
null === undefined; // false
```

Best Practice:

Prefer `===` to avoid unexpected behavior caused by type coercion. A common exception is `value == null`, which is `true` for both `null` and `undefined` and so checks for either in a single comparison.

```js
let value; // undefined
console.log(value == null); // true
console.log(value === null); // false
```

Explore the difference between `==` and `===` on GreatFrontEnd

4. What Is the Event Loop in JavaScript?

The event loop allows JavaScript to handle asynchronous tasks on a single thread, ensuring smooth execution without blocking.

Components:

- Call Stack: Tracks function calls in a LIFO order.

- Web APIs: Handle asynchronous tasks like timers and HTTP requests.

- Task Queue: Stores tasks (also called macrotasks) like `setTimeout` callbacks and UI events.
- Microtask Queue: Handles high-priority tasks like `Promise` callbacks.

Execution Order:

- Synchronous code executes first (call stack).

- Microtasks are processed next.

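- Then a single macrotask (such as a `setTimeout` callback) runs, after which the microtask queue is drained again, and the cycle repeats.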

Example:

```js
console.log('Start');
setTimeout(() => console.log('Timeout'), 0);
Promise.resolve().then(() => console.log('Promise'));
console.log('End');
```

Output:

```text
Start
End
Promise
Timeout
```

Explore the event loop in JavaScript on GreatFrontEnd
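A slightly extended version of the example shows that the microtask queue is drained completely, including microtasks queued by other microtasks, before the next macrotask runs. The function name `runEventLoopDemo` is illustrative; it records the order in an array instead of logging, so the result can be inspected.

```js
function runEventLoopDemo() {
  const order = [];
  return new Promise((resolve) => {
    order.push('sync 1');
    setTimeout(() => {
      order.push('timeout'); // macrotask: runs only after the microtask queue is empty
      resolve(order);
    }, 0);
    Promise.resolve()
      .then(() => order.push('microtask 1'))
      .then(() => order.push('microtask 2')); // queued by the previous microtask, still before the timeout
    order.push('sync 2');
  });
}

runEventLoopDemo().then((order) => console.log(order.join(' -> ')));
// sync 1 -> sync 2 -> microtask 1 -> microtask 2 -> timeout
```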

5. What Is Event Delegation?

Event delegation uses a single event listener on a parent element to manage events on its child elements. This approach takes advantage of event bubbling, improving efficiency.

Benefits:

- Reduces memory usage by limiting the number of listeners.

- Dynamically handles added or removed child elements.

Example:

```js
document.getElementById('parent').addEventListener('click', (event) => {
  if (event.target.tagName === 'BUTTON') {
    console.log(`Clicked ${event.target.textContent}`);
  }
});
```

Explore event delegation in JavaScript on GreatFrontEnd

6. How Does `this` Work in JavaScript?

The value of `this` depends on how a function is invoked:

Rules:

- Default Binding: In a plain function call, refers to the global object (`window` in browsers) in non-strict code, and is `undefined` in strict mode.

- Implicit Binding: Refers to the object before the dot.

- Explicit Binding: Set using `call`, `apply`, or `bind`.

- Arrow Functions: Lexically inherit `this` from the surrounding scope.
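The four rules can be seen side by side in a short sketch. `describe` is an illustrative function that just returns its `this`; it opts into strict mode so that default binding yields `undefined` rather than the global object.

```javascript
function describe() {
  'use strict'; // strict mode: a plain call leaves `this` undefined
  return this;
}

const obj = { name: 'obj', describe };
const other = { name: 'other' };

// Default binding: plain call
console.log(describe()); // undefined

// Implicit binding: the object before the dot
console.log(obj.describe() === obj); // true

// Explicit binding: call / apply / bind
console.log(describe.call(other) === other); // true
console.log(describe.bind(other)() === other); // true

// Arrow functions: `this` comes from the enclosing scope and cannot be rebound
const outer = {
  makeArrow() {
    return () => this;
  },
};
const arrow = outer.makeArrow();
console.log(arrow.call(other) === outer); // true
```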

Example:

```js
const obj = {
  name: 'Alice',
  greet() {
    console.log(this.name);
  },
};
obj.greet(); // Alice
```

Explore how `this` works in JavaScript on GreatFrontEnd

7. How Do Cookies, `localStorage`, and `sessionStorage` Differ?

Cookies:

- Sent with every HTTP request to the domain and path they are scoped to.

- Limited to about 4KB per cookie.

- Can be set to expire.

```js
document.cookie = 'token=abc123; expires=Fri, 31 Dec 2025 23:59:59 GMT; path=/';
console.log(document.cookie);
```

localStorage:

- Persistent storage (until manually cleared).

- Typically around 5MB per origin (the exact limit varies by browser).

```js
localStorage.setItem('key', 'value');
console.log(localStorage.getItem('key'));
```

sessionStorage:

- Scoped to a single tab: data survives page reloads but is cleared when the tab or window is closed.

- Also typically limited to around 5MB per origin.

```js
sessionStorage.setItem('key', 'value');
console.log(sessionStorage.getItem('key'));
```

Explore the difference between cookies, `localStorage`, and `sessionStorage` on GreatFrontEnd

8. What Are `<script>`, `<script async>`, and `<script defer>`?

`<script>`:

- Blocks HTML parsing until the script loads and executes.

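`<script async>`:

- Downloads the script in parallel with HTML parsing.

- Executes as soon as the download finishes, pausing parsing, potentially before HTML parsing completes. Multiple async scripts may run in any order.

`<script defer>`:

- Downloads the script in parallel with HTML parsing.

- Executes only after HTML parsing is complete, in the order the scripts appear in the document.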

```html
<script src="main.js"></script>
<script async src="async.js"></script>
<script defer src="defer.js"></script>
```

Explore the difference between `<script>`, `<script async>`, and `<script defer>` on GreatFrontEnd

9. How Do `null`, `undefined`, and Undeclared Variables Differ?

`null`:

Explicitly represents no value. Use `=== null` to check for it.

`undefined`:

Indicates a variable has been declared but not assigned a value.

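Undeclared:

Reading a variable that was never declared throws a `ReferenceError`. The exception is `typeof`, which returns `'undefined'` for an undeclared name instead of throwing.

```js
let a;
console.log(a); // undefined

let b = null;
console.log(b); // null

console.log(typeof c === 'undefined'); // true (c was never declared)
// console.log(c); // ReferenceError: c is not defined
```

Explore the difference between `null`, `undefined`, and undeclared variables on GreatFrontEnd

10. What Is the Difference Between `.call` and `.apply`?

`.call`:

Accepts arguments as a comma-separated list.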

`.apply`:

Accepts arguments as an array.

```js
function sum(a, b) {
  return a + b;
}
console.log(sum.call(null, 1, 2)); // 3
console.log(sum.apply(null, [1, 2])); // 3
```

Explore the difference between `.call` and `.apply` on GreatFrontEnd

11. What Is `Function.prototype.bind` and Why Is It Useful?

The `Function.prototype.bind` method creates a new function with a specific `this` context and, optionally, preset arguments. It’s particularly useful for ensuring a function has the correct `this` context when passed to another function or used as a callback.

Example:

```js
const john = {
  age: 42,
  getAge: function () {
    return this.age;
  },
};

console.log(john.getAge()); // 42

const unboundGetAge = john.getAge;
console.log(unboundGetAge()); // undefined in non-strict code (TypeError in strict mode)

const boundGetAge = john.getAge.bind(john);
console.log(boundGetAge()); // 42

const mary = { age: 21 };
const boundGetAgeMary = john.getAge.bind(mary);
console.log(boundGetAgeMary()); // 21
```

Common Uses:

- Binding `this`: `bind` is often used to fix the `this` value for a method, ensuring it always refers to the intended object.

- Partial Application: You can predefine some arguments for a function using `bind`.

- Method Borrowing: `bind` allows methods from one object to be used on another object.
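The partial application and method borrowing uses can be sketched as follows. `multiply`, `counterA`, and `counterB` are illustrative names; the last example borrows the built-in `Array.prototype.join`, which works on any array-like object with a `length`.

```javascript
// Partial application: preset the first argument
function multiply(a, b) {
  return a * b;
}
const double = multiply.bind(null, 2); // `this` is unused, so null is fine
console.log(double(21)); // 42

// Method borrowing: run one object's method against another object
const counterA = {
  count: 5,
  describe() {
    return `count is ${this.count}`;
  },
};
const counterB = { count: 9 };
const describeB = counterA.describe.bind(counterB);
console.log(describeB()); // count is 9

// Borrowing a built-in: Array.prototype.join on an array-like object
const arrayLike = { 0: 'a', 1: 'b', length: 2 };
const joinArrayLike = Array.prototype.join.bind(arrayLike);
console.log(joinArrayLike('-')); // a-b
```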

Explore `Function.prototype.bind` on GreatFrontEnd

12. Why Use Arrow Functions for Methods in Constructors?

Arrow functions don't have their own `this`; they use the `this` of the surrounding lexical scope. An arrow function created inside a constructor therefore always refers to the new instance, no matter how it is later called. This behavior makes code more predictable and easier to debug.

Example:

```js
const Person = function (name) {
  this.name = name;
  this.sayName1 = function () {
    console.log(this.name);
  };
  this.sayName2 = () => {
    console.log(this.name);
  };
};

const john = new Person('John');
const dave = new Person('Dave');

john.sayName1(); // John
john.sayName2(); // John

// The regular function's `this` can be changed; the arrow function's cannot
john.sayName1.call(dave); // Dave
john.sayName2.call(dave); // John
```

When to Use:

- In scenarios like React class components, where methods are passed as props and need to retain their original `this` context.

Explore the advantage of using the arrow syntax for a method in a constructor on GreatFrontEnd
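The React case above comes down to passing a method somewhere that calls it without its object. The sketch below reproduces that without React: `Counter` and `callLater` are hypothetical names, and `callLater` simply invokes the callback with no receiver, as an event system or child component might. The regular method uses strict mode, so losing `this` surfaces as a `TypeError` instead of silently touching the global object.

```javascript
function Counter() {
  this.count = 0;
  // Arrow function: `this` is permanently the instance being constructed
  this.incrementArrow = () => ++this.count;
}

// Regular prototype method: `this` depends on how it is called
Counter.prototype.incrementRegular = function () {
  'use strict';
  return ++this.count; // throws a TypeError if `this` is undefined
};

// Hypothetical stand-in for code that calls a callback without a receiver
const callLater = (callback) => callback();

const counter = new Counter();

console.log(callLater(counter.incrementArrow)); // 1

try {
  callLater(counter.incrementRegular);
} catch (err) {
  console.log(err instanceof TypeError); // true
}

// Binding restores the receiver for the regular method
console.log(callLater(counter.incrementRegular.bind(counter))); // 2
```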

13. How Does Prototypal Inheritance Work?

Prototypal inheritance allows objects to inherit properties and methods from other objects through the prototype chain.

Key Concepts:

1. Prototypes:

Every JavaScript object has an internal link to its prototype, which is either another object it inherits properties from or `null`.

```js
function Person(name, age) {
  this.name = name;
  this.age = age;
}

Person.prototype.sayHello = function () {
  console.log(`Hello, my name is ${this.name} and I am ${this.age} years old.`);
};

const john = new Person('John', 30);
john.sayHello(); // Hello, my name is John and I am 30 years old.
```

2. Prototype Chain:

When a property is accessed, JavaScript looks for it on the object itself and then continues up the prototype chain until it finds the property or reaches `null`.

3. Constructor Functions:

Used with `new` to create objects and set their prototype.

```js
function Animal(name) {
  this.name = name;
}

Animal.prototype.sayName = function () {
  console.log(`My name is ${this.name}`);
};

function Dog(name, breed) {
  Animal.call(this, name); // Run the parent constructor on this instance
  this.breed = breed;
}

// Link Dog.prototype to Animal.prototype, then restore the constructor reference
Dog.prototype = Object.create(Animal.prototype);
Dog.prototype.constructor = Dog;

Dog.prototype.bark = function () {
  console.log('Woof!');
};

const fido = new Dog('Fido', 'Labrador');
fido.sayName(); // My name is Fido
fido.bark(); // Woof!
```

Explore how prototypal inheritance works on GreatFrontEnd
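To see the chain itself, the sketch below repeats a trimmed-down version of the `Animal`/`Dog` setup (so it runs on its own) and walks it with `Object.getPrototypeOf()` until it reaches `null`. It also contrasts own properties with inherited ones using `Object.hasOwn()` and the `in` operator.

```javascript
function Animal(name) {
  this.name = name;
}
Animal.prototype.sayName = function () {
  return `My name is ${this.name}`;
};

function Dog(name) {
  Animal.call(this, name);
}
Dog.prototype = Object.create(Animal.prototype);
Dog.prototype.constructor = Dog;

const fido = new Dog('Fido');

// Walk the chain: fido -> Dog.prototype -> Animal.prototype -> Object.prototype -> null
const chain = [];
for (let current = fido; current !== null; current = Object.getPrototypeOf(current)) {
  chain.push(current);
}
console.log(chain.length); // 4

// `name` lives on the instance; `sayName` is found further up the chain
console.log(Object.hasOwn(fido, 'name')); // true
console.log(Object.hasOwn(fido, 'sayName')); // false
console.log('sayName' in fido); // true
console.log(fido.sayName()); // My name is Fido
```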

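14. What’s the Difference Between `function Person(){}`, `const person = Person()`, and `const person = new Person()`?

Function Declaration:

`function Person() {}` is a standard function declaration. When written in PascalCase, it conventionally represents a constructor function.

Function Call:

`const person = Person()` calls the function and executes its code but does not create a new object. Unless the function returns something, `person` is `undefined`, and any `this.x = ...` assignments inside it go to the global object in non-strict code (or throw a `TypeError` in strict mode).

Constructor Call:

`const person = new Person()` creates a new object, sets its prototype to `Person.prototype`, runs `Person` with `this` bound to that object, and returns it.

15. How Do Function Declarations and Expressions Differ?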

Function Declarations:

```js
function foo() {
  console.log('Function declaration');
}
```

- Hoisted with their body.

- Can be invoked before their definition.

Function Expressions:

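```js
const foo = function () {
  console.log('Function expression');
};
```

- Only the variable declaration is hoisted, not the function body. With `var` the variable is `undefined` until the assignment runs; with `let` or `const`, accessing it earlier throws a `ReferenceError`.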

- Cannot be invoked before their definition.

Explore the differences between function declarations and expressions on GreatFrontEnd

16. How Can You Create Objects in JavaScript?

- Object Literals:

```js
const person = { firstName: 'John', lastName: 'Doe' };
```

- `Object()` Constructor:

```js
const person = new Object();
person.firstName = 'John';
person.lastName = 'Doe';
```

- `Object.create()`:

```js
const proto = {
  greet() {
    console.log('Hello!');
  },
};
const person = Object.create(proto);
person.greet(); // Hello!
```

- ES2015 Classes:

```js
class Person {
  constructor(name, age) {
    this.name = name;
    this.age = age;
  }
}
```

Explore ways to create objects in JavaScript on GreatFrontEnd

17. What Are Higher-Order Functions?

Higher-order functions do at least one of the following:

- Take other functions as arguments.

- Return functions.

Example:

```js
function multiplier(factor) {
  return function (number) {
    return number * factor;
  };
}

const double = multiplier(2);
console.log(double(5)); // 10
```

Explore higher-order functions on GreatFrontEnd
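The `multiplier` example returns a function; the sketch below covers the other half of the definition, functions that take functions as arguments. `applyTwice` and `once` are illustrative names rather than built-ins. `once` both takes and returns a function, and the last line shows that built-in array methods like `filter` and `map` are higher-order functions too.

```javascript
// Takes a function as an argument
function applyTwice(fn, value) {
  return fn(fn(value));
}
console.log(applyTwice((n) => n + 3, 10)); // 16

// Takes a function and returns a new one that runs `fn` at most once
function once(fn) {
  let called = false;
  let result;
  return function (...args) {
    if (!called) {
      called = true;
      result = fn.apply(this, args);
    }
    return result; // later calls return the first result
  };
}

let setupRuns = 0;
const setup = once(() => ++setupRuns);
setup();
setup();
console.log(setupRuns); // 1

// Built-in higher-order functions
console.log([1, 2, 3, 4].filter((n) => n % 2 === 0).map((n) => n * 10)); // [ 20, 40 ]
```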

18. Differences Between ES2015 Classes and ES5 Constructors

ES5 Constructor:

```js
function Person(name) {
  this.name = name;
}

Person.prototype.greet = function () {
  console.log(`Hello, I’m ${this.name}`);
};
```

ES2015 Class:

```js
class Person {
  constructor(name) {
    this.name = name;
  }

  greet() {
    console.log(`Hello, I’m ${this.name}`);
  }
}
```

Key Differences:

- Syntax: Classes are easier to read and write.

- Inheritance: Classes use `extends` and `super`.

Explore ES2015 classes and ES5 constructors on GreatFrontEnd
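One further practical difference is how each style behaves when invoked without `new`. In this sketch (`PersonES5` and `PersonClass` are illustrative names), the ES5 constructor runs like any other function, while the class constructor throws a `TypeError`. `call()` supplies an explicit `this` so nothing is written to the global object.

```javascript
function PersonES5(name) {
  this.name = name;
}

class PersonClass {
  constructor(name) {
    this.name = name;
  }
}

// An ES5 constructor is an ordinary function, so it runs without `new`
const target = {};
const returned = PersonES5.call(target, 'Bob');
console.log(returned, target.name); // undefined Bob

// A class constructor refuses to run without `new`
try {
  PersonClass.call({}, 'Bob');
} catch (err) {
  console.log(err instanceof TypeError); // true
}

console.log(new PersonClass('Ann').name); // Ann
```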

19. What Is Event Bubbling?

Event bubbling is when an event starts at the target element and propagates up through its ancestors.

Example:

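```js
// Assumes `child` is an element nested inside `parent`
parent.addEventListener('click', () => console.log('Parent clicked'));
child.addEventListener('click', () => console.log('Child clicked'));
```

Clicking the child triggers both handlers: "Child clicked" is logged first, then "Parent clicked".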

Explore event bubbling on GreatFrontEnd

20. What Is Event Capturing?

Event capturing is when an event starts at the root and propagates down to the target element.

Enabling Capturing:

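```js
// Passing `true` (or `{ capture: true }`) as the third argument
// registers the listener for the capturing phase
parent.addEventListener('click', () => console.log('Parent capturing'), true);
```

Explore event capturing on GreatFrontEnd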

21. How Do the `mouseenter` and `mouseover` Events Differ in JavaScript and Browsers?

`mouseenter`

- Does not bubble up the DOM tree

- Fires when the cursor enters the element from outside; moving between its child elements does not trigger the element's listener again

- Triggers only once upon entering the element, regardless of its internal content

`mouseover`

- Bubbles up through the DOM hierarchy

- Activates when the cursor enters the element or any of its descendant elements

- May lead to multiple event callbacks if there are nested child elements

22. Can You Differentiate Between Synchronous and Asynchronous Functions?

Synchronous Functions

- Execute operations in a sequential, step-by-step manner

- Block the program's execution until the current task completes

- Adhere to a strict, line-by-line execution order

- Are generally easier to comprehend and debug due to their predictable flow

- Common use cases include reading files synchronously and iterating over large datasets

Example:

```js
const fs = require('fs');

const data = fs.readFileSync('large-file.txt', 'utf8');
console.log(data); // Blocks until the file is read
console.log('End of the program');
```

Asynchronous Functions

- Allow the program to continue running without waiting for the task to finish

- Enable other operations to proceed while waiting for responses or the completion of time-consuming tasks

- Are non-blocking, facilitating concurrent execution and enhancing performance and responsiveness

- Commonly used for network requests, file I/O, timers, and animations

Example:

```js
console.log('Start of the program');

fetch('https://api.example.com/data')
  .then((response) => response.json())
  .then((data) => console.log(data)) // Non-blocking
  .catch((error) => console.error(error));

console.log('End of program');
```

Understand the distinctions between synchronous and asynchronous functions on GreatFrontEnd

23. Provide a Comprehensive Explanation of AJAX

AJAX (Asynchronous JavaScript and XML) encompasses a collection of web development techniques that utilize various client-side technologies to build asynchronous web applications. Unlike traditional web applications where every user interaction results in a complete page reload, AJAX enables web apps to send and retrieve data from a server asynchronously. This allows for dynamic updates to specific parts of a web page without disrupting the overall page display and behavior.

Key Highlights:

- Asynchronous Operations: AJAX allows parts of a web page to update independently without reloading the entire page.

- Data Formats: Initially utilized XML, but JSON has become more prevalent due to its seamless compatibility with JavaScript.

- APIs: Traditionally relied on `XMLHttpRequest`, though `fetch()` is now the preferred choice for modern web development.

`XMLHttpRequest` API Example:

```js
const xhr = new XMLHttpRequest();
xhr.onreadystatechange = function () {
  if (xhr.readyState === XMLHttpRequest.DONE) {
    if (xhr.status === 200) {
      console.log(xhr.responseText);
    } else {
      console.error('Request failed: ' + xhr.status);
    }
  }
};
xhr.open('GET', 'https://jsonplaceholder.typicode.com/todos/1', true);
xhr.send();
```

- Process: Initiates a new `XMLHttpRequest`, assigns a callback to handle state changes, opens a connection to a specified URL, and sends the request.

`fetch()` API Example:

```js
fetch('https://jsonplaceholder.typicode.com/todos/1')
  .then((response) => {
    if (!response.ok) {
      throw new Error('Network response was not ok');
    }
    return response.json();
  })
  .then((data) => console.log(data))
  .catch((error) => console.error('Fetch error:', error));
```

- Process: Starts a fetch request, processes the response with `.then()` to parse JSON data, and handles errors using `.catch()`.

How AJAX Operates with `fetch`

1. Initiating a Request

- `fetch()` starts an asynchronous request to obtain a resource from a given URL.

- Example:

```js
fetch('https://api.example.com/data', {
  method: 'GET', // or 'POST', 'PUT', 'DELETE', etc.
  headers: {
    'Content-Type': 'application/json',
  },
});
```

2. Promise-Based Response

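`fetch()` returns a Promise that resolves to a `Response` object representing the server's reply. The Promise resolves even for HTTP error statuses such as 404 or 500; it rejects only when the request itself fails, for example because of a network error.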

3. Managing the Response

- The `Response` object provides methods to handle the content, such as `.json()`, `.text()`, and `.blob()`.

- Example:

```js
fetch('https://api.example.com/data')
  .then((response) => response.json())
  .then((data) => console.log(data))
  .catch((error) => console.error('Error:', error));
```

4. Asynchronous Nature

- `fetch()` operates asynchronously, allowing the browser to perform other tasks while awaiting the server's response.

- Promise callbacks (`.then()`, `.catch()`) are processed in the microtask queue as part of the event loop.

5. Configuring Request Options

- The optional second parameter of `fetch()` allows configuration of various request settings, including HTTP method, headers, body, credentials, and caching behavior.

6. Handling Errors

- Network failures reject the Promise and can be handled with `.catch()`, or with `try`/`catch` when using `async`/`await`. HTTP error statuses do not reject on their own, so check `response.ok` and throw if needed.

Learn how to explain AJAX in detail on GreatFrontEnd
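The same flow can be written with `async`/`await` and `try`/`catch`, as point 6 mentions. This is a minimal sketch: `fetchJson` is a hypothetical helper name, and it assumes an environment with a global `fetch()` (modern browsers, or Node.js 18 and later). Because `fetch()` resolves for HTTP error statuses, the helper checks `response.ok` itself.

```javascript
async function fetchJson(url, options = {}) {
  try {
    const response = await fetch(url, options);
    // fetch() resolves for 404/500 responses, so check the status explicitly
    if (!response.ok) {
      throw new Error(`HTTP error: ${response.status}`);
    }
    return await response.json();
  } catch (error) {
    console.error('Fetch error:', error);
    throw error; // rethrow so the caller can decide how to recover
  }
}

// Usage, inside another async function:
// const todo = await fetchJson('https://jsonplaceholder.typicode.com/todos/1');
```

Rethrowing after logging keeps the helper honest: callers still see the failure instead of receiving `undefined`.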

24. What Are the Pros and Cons of Utilizing AJAX?

AJAX (Asynchronous JavaScript and XML) facilitates the asynchronous exchange of data between web pages and servers, enabling dynamic content updates without necessitating full page reloads.

Advantages

- Enhanced User Experience: Updates content seamlessly without refreshing the entire page.

- Improved Performance: Reduces server load by fetching only the required data.

- Maintains State: Preserves user interactions and client-side states within the page.

Disadvantages

- Dependency on JavaScript: Functionality can break if JavaScript is disabled in the browser.

- Bookmarking Issues: Dynamic content updates make it difficult to bookmark specific states of a page.

- SEO Challenges: Search engines may find it hard to index dynamically loaded content effectively.

- Performance on Low-End Devices: Processing AJAX data can be resource-intensive, potentially slowing down performance on less powerful devices.

Explore the benefits and drawbacks of using AJAX on GreatFrontEnd

25. How Do `XMLHttpRequest` and `fetch()` Differ?

Both `XMLHttpRequest` (XHR) and `fetch()` facilitate asynchronous HTTP requests in JavaScript, but they vary in syntax, handling mechanisms, and features.

Syntax and Implementation

- XMLHttpRequest: Utilizes an event-driven approach, requiring event listeners to manage responses and errors.

- fetch(): Employs a Promise-based model, offering a more straightforward and intuitive syntax.

Setting Request Headers

- XMLHttpRequest: Headers are set using the `setRequestHeader` method.

- fetch(): Headers are provided as an object within the options parameter.

Sending the Request Body

- XMLHttpRequest: The request body is sent using the `send` method.

- fetch(): The `body` property within the options parameter is used to include the request body.

Handling Responses

- XMLHttpRequest: Uses the `responseType` property to manage different response formats.

- fetch(): Resolves to a `Response` object whose methods, such as `.json()` and `.text()`, return Promises for the body in the desired format.

Managing Errors

- XMLHttpRequest: Errors are handled via the `onerror` event.

- fetch(): Errors are managed using the `.catch` method.

Controlling Caching

- XMLHttpRequest: Managing cache can be cumbersome and often requires workaround strategies.

- fetch(): Directly supports caching behavior through the `cache` option (for example, `'no-store'` or `'reload'`).

Canceling Requests

- XMLHttpRequest: Requests can be aborted using the `abort()` method.

- fetch(): Utilizes `AbortController` for canceling requests.
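As a rough sketch of the `AbortController` approach, the hypothetical `fetchWithTimeout` helper below aborts a request that takes longer than `ms` milliseconds. It assumes a global `fetch()` and `AbortController` (modern browsers and Node.js 18+); an aborted request rejects with an error whose `name` is `'AbortError'`.

```javascript
function fetchWithTimeout(url, ms, options = {}) {
  const controller = new AbortController();
  // Abort the request if it has not settled within `ms` milliseconds
  const timer = setTimeout(() => controller.abort(), ms);
  // Pass the controller's signal so fetch() can observe the abort
  return fetch(url, { ...options, signal: controller.signal }).finally(() =>
    clearTimeout(timer),
  );
}

// Usage:
// fetchWithTimeout('https://api.example.com/data', 5000)
//   .then((response) => response.json())
//   .catch((error) => {
//     if (error.name === 'AbortError') console.log('Request timed out');
//   });
```

Newer environments also provide `AbortSignal.timeout(ms)` as a shortcut for the timeout case; a hand-made `AbortController` is still the way to cancel a request in response to something else, such as the user navigating away.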

Tracking Progress

- XMLHttpRequest: Supports progress tracking with the `onprogress` event.

- fetch(): Has no built-in progress events; download progress can only be tracked by reading `response.body` as a stream.

Choosing Between Them:

`fetch()` is generally favored for its cleaner syntax and Promise-based handling, though `XMLHttpRequest` remains useful for specific scenarios like progress tracking.

Discover the distinctions between `XMLHttpRequest` and `fetch()` on GreatFrontEnd

26. What Are the Different Data Types in JavaScript?

JavaScript encompasses a variety of data types, which are categorized into two main groups: primitive and non-primitive (reference) types.

Primitive Data Types

- Number: Represents both integer and floating-point numbers.

- String: Denotes sequences of characters, enclosed in single quotes, double quotes, or backticks.

- Boolean: Logical values with `true` or `false`.

- Undefined: A variable that has been declared but not assigned a value.

- Null: Signifies the intentional absence of any object value.

- Symbol: A unique and immutable value used primarily as object property keys.

- BigInt: Allows representation of integers with arbitrary precision, useful for very large numbers.

Non-Primitive Data Types

- Object: Stores collections of data and more complex entities.

- Array: An ordered list of values.

- Function: Functions are treated as objects and can be defined using declarations or expressions.

- Date: Represents dates and times.

- RegExp: Used for defining regular expressions for pattern matching within strings.

- Map: A collection of keyed data items, allowing keys of any type.

- Set: A collection of unique values.

Identifying Data Types: JavaScript is dynamically typed, meaning variables can hold different types of data at various times. The `typeof` operator is used to determine a value's type, with two well-known gaps: it returns `'object'` for both `null` and arrays.

Explore the variety of data types in JavaScript on GreatFrontEnd
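Because `typeof` reports `'object'` for both `null` and arrays, code that needs finer distinctions usually adds explicit checks. `typeOf` below is a hypothetical helper, a sketch rather than a complete type-detection utility; it relies on the standard `Array.isArray()`.

```javascript
function typeOf(value) {
  if (value === null) return 'null'; // typeof null === 'object' (historical quirk)
  if (Array.isArray(value)) return 'array'; // typeof [] === 'object'
  return typeof value;
}

const samples = [42, 'hi', true, undefined, null, Symbol('id'), 10n, { a: 1 }, [1, 2], () => {}];

// Print the raw typeof result next to the refined one
for (const value of samples) {
  console.log(typeof value, '->', typeOf(value));
}
```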

27. What Constructs Do You Use to Iterate Over Object Properties and Array Elements?

Looping through object properties and array items is a fundamental task in JavaScript, and there are multiple methods to accomplish this. Below are some of the common approaches:

Iterating Over Objects

1. `for...in` Loop

Iterates over all enumerable string-keyed properties of an object, including inherited ones.

```js
for (const property in obj) {
  // Skip properties inherited through the prototype chain
  if (Object.hasOwn(obj, property)) {
    console.log(property);
  }
}
```

2. `Object.keys()`

Returns an array containing the object's own enumerable property names.

```js
Object.keys(obj).forEach((property) => console.log(property));
```

3. `Object.entries()`

Provides an array of the object's own enumerable string-keyed `[key, value]` pairs.

```js
Object.entries(obj).forEach(([key, value]) => console.log(`${key}: ${value}`));
```

4. `Object.getOwnPropertyNames()`

Returns an array of all string-keyed properties (including non-enumerable ones) found directly on the object.

```js
Object.getOwnPropertyNames(obj).forEach((property) => console.log(property));
```

Iterating Over Arrays

1. `for` Loop

A traditional loop for iterating over array elements.

```js
for (let i = 0; i < arr.length; i++) {
  console.log(arr[i]);
}
```

2. `Array.prototype.forEach()`

Executes a provided function once for each array element.

```js
arr.forEach((element, index) => console.log(element, index));
```

3. `for...of` Loop

Iterates over iterable objects like arrays.

```js
for (const element of arr) {
  console.log(element);
}
```

4. `Array.prototype.entries()`

Provides both the index and value of each array element within a `for...of` loop.

```js
for (const [index, elem] of arr.entries()) {
  console.log(index, ': ', elem);
}
```

28. What Are the Advantages of Using Spread Syntax, and How Does It Differ from Rest Syntax?

Spread Syntax

Introduced in ES2015 (with object spread added in ES2018), the spread syntax (`...`) is a powerful feature for copying and merging arrays and objects without altering the originals. It's widely used in functional programming, Redux, and RxJS.

- Cloning Arrays/Objects: Creates shallow copies.

```js
const array = [1, 2, 3];
const newArray = [...array]; // [1, 2, 3]

const obj = { name: 'John', age: 30 };
const newObj = { ...obj, city: 'New York' }; // { name: 'John', age: 30, city: 'New York' }
```

- Combining Arrays/Objects: Merges them into a new entity.

```js
const arr1 = [1, 2, 3];
const arr2 = [4, 5, 6];
const mergedArray = [...arr1, ...arr2]; // [1, 2, 3, 4, 5, 6]

const obj1 = { foo: 'bar' };
const obj2 = { qux: 'baz' };
const mergedObj = { ...obj1, ...obj2 }; // { foo: 'bar', qux: 'baz' }
```

- Passing Function Arguments: Spreads array elements as individual arguments.

```js
const numbers = [1, 2, 3];
Math.max(...numbers); // Equivalent to Math.max(1, 2, 3)
```

- Array vs. Object Spreads: Only iterables can be spread into arrays, while arrays can also be spread into objects.

```js
const array = [1, 2, 3];
const obj = { ...array }; // { 0: 1, 1: 2, 2: 3 }
```

Rest Syntax

The rest syntax (`...`) collects multiple elements into an array or object, functioning as the opposite of spread syntax.

- Function Parameters: Gathers remaining arguments into an array.

```js
function addFiveToNumbers(...numbers) {
  return numbers.map((x) => x + 5);
}
const result = addFiveToNumbers(4, 5, 6, 7); // [9, 10, 11, 12]
```

- Array Destructuring: Collects remaining elements into a new array.

```js
const [first, second, ...remaining] = [1, 2, 3, 4, 5];
// first: 1, second: 2, remaining: [3, 4, 5]
```

- Object Destructuring: Gathers remaining properties into a new object.

```js
const { e, f, ...others } = { e: 1, f: 2, g: 3, h: 4 };
// e: 1, f: 2, others: { g: 3, h: 4 }
```

- Rest Parameter Rules: Must be the final parameter in a function.

```js
// SyntaxError: a rest parameter must be the last parameter
function addFiveToNumbers(arg1, ...numbers, arg2) {}
```

Understand the benefits of spread syntax and how it differs from rest syntax on GreatFrontEnd

29. How Does a `Map` Object Differ from a Plain Object in JavaScript?

Map Object

- Key Flexibility: Allows keys of any type, including objects, functions, and primitives.

- Order Preservation: Maintains the order in which keys are inserted.

- Size Property: Includes a `size` property to easily determine the number of key-value pairs.

- Iteration: Directly iterable, with methods like `forEach`, `keys()`, `values()`, and `entries()`.

- Performance: Tends to perform better in scenarios involving frequent additions and removals of key-value pairs.

Plain Object

- Key Types: Keys must be strings or symbols; any other key is converted to a string.

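- Order: Own properties follow a defined order (integer-like keys first in ascending order, then other string keys in insertion order), so iteration order does not always match insertion order.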

- Size Tracking: Lacks a built-in property to determine the number of keys; requires manual counting.

- Iteration: Not iterable by default. Requires methods like `Object.keys()`, `Object.values()`, or `Object.entries()` to iterate.

- Performance: Often sufficient, and simpler to use, for small datasets and simple operations.

Example

```js
// Map
const map = new Map();
map.set('key1', 'value1');
map.set({ key: 'key2' }, 'value2');
console.log(map.size); // 2

// Plain Object
const obj = { key1: 'value1' };
obj[{ key: 'key2' }] = 'value2'; // the object key is converted to '[object Object]'
console.log(Object.keys(obj)); // ['key1', '[object Object]']
```

Discover the differences between a `Map` object and a plain object in JavaScript on GreatFrontEnd

30. What Are the Differences Between `Map`/`Set` and `WeakMap`/`WeakSet`?

The primary distinctions between `Map`/`Set` and `WeakMap`/`WeakSet` in JavaScript are outlined below:

- Key Types: `Map` keys and `Set` values can be of any type, including objects, primitives, and functions. `WeakMap` keys and `WeakSet` values must be objects, so primitive values like strings or numbers are not allowed.

- Memory Management: `Map` and `Set` maintain strong references to their keys and values, preventing their garbage collection. `WeakMap` and `WeakSet` use weak references for keys (objects), allowing garbage collection if there are no other strong references.

- Key Enumeration: `Map` and `Set` are iterable, so their contents can be listed. `WeakMap` and `WeakSet` do not allow enumeration, making it impossible to retrieve lists of keys or values directly.

- Size Property: `Map` and `Set` provide a `size` property indicating the number of elements. `WeakMap` and `WeakSet` lack a `size` property since their size can change due to garbage collection.

- Use Cases: `Map` and `Set` are suitable for general-purpose data storage and caching. `WeakMap` and `WeakSet` are ideal for storing metadata or additional object-related information without preventing the objects from being garbage collected when they are no longer needed.
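A typical `WeakMap` use is attaching per-object data that should disappear along with the object. In this sketch, `visitCounts` and `recordVisit` are hypothetical names. The final line drops the only reference to the object, after which its entry becomes eligible for garbage collection; that is not directly observable, since `WeakMap` has no `size` or iteration.

```javascript
const visitCounts = new WeakMap();

function recordVisit(user) {
  const count = (visitCounts.get(user) ?? 0) + 1;
  visitCounts.set(user, count);
  return count;
}

let alice = { name: 'Alice' };
recordVisit(alice);
console.log(recordVisit(alice)); // 2

// Keys must be objects: a primitive key throws
try {
  visitCounts.set('alice', 1);
} catch (err) {
  console.log(err instanceof TypeError); // true
}

// Drop the only reference; the entry can now be garbage collected
alice = null;
```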

Learn about the differences between `Map`/`Set` and `WeakMap`/`WeakSet` on GreatFrontEnd

31. What is a Practical Scenario for Using the Arrow `=>` Function Syntax?

One effective application of JavaScript's arrow function syntax is streamlining callback functions, especially when concise, inline function definitions are needed. Consider the following example:

Scenario: Doubling Array Elements with `map`

Imagine you have an array of numbers and you want to double each number using the `map` method.

```js
// Traditional function syntax
const numbers = [1, 2, 3, 4, 5];
const doubledNumbers = numbers.map(function (number) {
  return number * 2;
});
console.log(doubledNumbers); // Output: [2, 4, 6, 8, 10]
```

By utilizing arrow function syntax, the same outcome can be achieved more succinctly:

```js
// Arrow function syntax
const numbers = [1, 2, 3, 4, 5];
const doubledNumbers = numbers.map((number) => number * 2);
console.log(doubledNumbers); // Output: [2, 4, 6, 8, 10]
```

Explore a use case for the new arrow => function syntax on GreatFrontEnd

32. How Do Callback Functions Operate in Asynchronous Tasks?

In the realm of asynchronous programming, a callback function is passed as an argument to another function and is executed once a particular task completes, such as data retrieval or handling input/output operations. Here's a straightforward explanation:

Example

```js
function fetchData(callback) {
  // Simulate a slow operation, then hand the result to the callback
  setTimeout(() => {
    const data = { name: 'John', age: 30 };
    callback(data);
  }, 1000);
}

fetchData((data) => {
  console.log(data); // { name: 'John', age: 30 }
});
```

Explore the concept of a callback function in asynchronous operations on GreatFrontEnd

33. Can You Describe Debouncing and Throttling Techniques?

Debouncing and throttling are techniques used to control the rate at which functions are executed, optimizing performance and managing event-driven behaviors in JavaScript applications.

- Debouncing: Delays the execution of a function until a specified period has elapsed since its last invocation. This is particularly useful for scenarios like handling search input where you want to wait until the user has finished typing before executing a function.

```js
function debounce(func, delay) {
  let timeoutId;
  // A regular function (not an arrow) so `this` is the caller's context
  return function (...args) {
    clearTimeout(timeoutId);
    timeoutId = setTimeout(() => func.apply(this, args), delay);
  };
}
```

- Throttling: Restricts a function to be executed no more than once within a given timeframe. This is beneficial for handling events that fire frequently, such as window resizing or scrolling.

```js
function throttle(func, limit) {
  let inThrottle = false;
  // A regular function (not an arrow) so `this` is the caller's context
  return function (...args) {
    if (!inThrottle) {
      func.apply(this, args);
      inThrottle = true;
      setTimeout(() => (inThrottle = false), limit);
    }
  };
}
```

These strategies help in enhancing application performance by preventing excessive function calls.

Explore the concept of debouncing and throttling on GreatFrontEnd
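To see the difference in behavior, this sketch repeats the two helpers from above (so it runs on its own) and fires each three times in a row. The `searches` and `scrolls` arrays are illustrative recorders: the debounced function runs once, with the last arguments, after the calls stop; the throttled function runs once, immediately, and ignores the calls made during its wait.

```javascript
function debounce(func, delay) {
  let timeoutId;
  return function (...args) {
    clearTimeout(timeoutId);
    timeoutId = setTimeout(() => func.apply(this, args), delay);
  };
}

function throttle(func, limit) {
  let inThrottle = false;
  return function (...args) {
    if (!inThrottle) {
      func.apply(this, args);
      inThrottle = true;
      setTimeout(() => (inThrottle = false), limit);
    }
  };
}

const searches = [];
const scrolls = [];

const onSearchInput = debounce((query) => searches.push(query), 50);
const onScroll = throttle((position) => scrolls.push(position), 50);

// Three rapid calls each, as if a user were typing or scrolling quickly
onSearchInput('r');
onSearchInput('re');
onSearchInput('rea');
onScroll(10);
onScroll(20);
onScroll(30);

console.log(scrolls); // [ 10 ] (the throttled call ran immediately)

setTimeout(() => console.log(searches), 100); // [ 'rea' ]
```

This simple throttle drops calls made during the wait instead of replaying the last one afterward; some library implementations offer that trailing call as an option.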

34. How Does Destructuring Assignment Work for Objects and Arrays?

Destructuring assignment in JavaScript provides a concise way to extract values from arrays or properties from objects into individual variables.

Example

```js
// Array destructuring
const [a, b] = [1, 2];

// Object destructuring
const { name, age } = { name: 'John', age: 30 };
```

This syntax employs square brackets for arrays and curly braces for objects, allowing for streamlined variable assignments directly from data structures.

Explore the concept of destructuring assignment for objects and arrays on GreatFrontEnd
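Beyond the basic form, destructuring supports renaming, default values, nested patterns, skipping elements, and swapping variables. The values below are illustrative.

```javascript
const user = { id: 7, profile: { displayName: 'Ada' } };

// Rename `id` to `userId`, default `role`, and reach into a nested object
const {
  id: userId,
  role = 'guest',
  profile: { displayName },
} = user;
console.log(userId, role, displayName); // 7 guest Ada

// Skip the second element; the default applies because index 2 is undefined
const [first, , third = 'none'] = ['a', 'b'];
console.log(first, third); // a none

// Swap two variables without a temporary
let x = 1;
let y = 2;
[x, y] = [y, x];
console.log(x, y); // 2 1
```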

35. What is Hoisting in the Context of Functions?

Hoisting in JavaScript refers to the behavior where function declarations are processed before any code in their scope runs, as if they were moved to the top of their containing scope. This allows functions to be invoked before their actual definition in the code. Conversely, function expressions and arrow functions must be defined prior to their invocation to avoid errors.

Example

```js
// Function declaration
hoistedFunction(); // Works fine
function hoistedFunction() {
  console.log('This function is hoisted');
}

// Function expression
nonHoistedFunction(); // TypeError: nonHoistedFunction is not a function
var nonHoistedFunction = function () {
  console.log('This function is not hoisted');
};
```

Explore the concept of hoisting with regards to functions on GreatFrontEnd
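Function declarations are not the only thing that is hoisted. This sketch (the `demo` function and variable names are illustrative) contrasts a hoisted declaration with `var`, which is initialized to `undefined`, and `let`, which is hoisted but stays in the temporal dead zone until its declaration line runs.

```javascript
function demo() {
  const results = [];

  // `var` is hoisted and initialized to undefined
  results.push(varValue); // undefined
  var varValue = 'var';

  // `let` is hoisted but uninitialized: reading it early throws
  try {
    results.push(letValue);
  } catch (err) {
    results.push(err.name); // 'ReferenceError'
  }
  let letValue = 'let';

  // Function declarations are hoisted with their body
  results.push(declared()); // 'declared'
  function declared() {
    return 'declared';
  }

  return results;
}

console.log(demo()); // [ undefined, 'ReferenceError', 'declared' ]
```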

36. How Does Inheritance Work in ES2015 Classes?

In ES2015, JavaScript introduces the `class` syntax with the `extends` keyword, enabling one class to inherit properties and methods from another. The `super` keyword is used to access the parent class's constructor and methods.

Example

```js
class Animal {
  constructor(name) {
    this.name = name;
  }

  speak() {
    console.log(`${this.name} makes a noise.`);
  }
}

class Dog extends Animal {
  constructor(name, breed) {
    super(name);
    this.breed = breed;
  }

  speak() {
    console.log(`${this.name} barks.`);
  }
}

const dog = new Dog('Rex', 'German Shepherd');
dog.speak(); // Output: Rex barks.
```

In this example, the `Dog` class inherits from the `Animal` class, demonstrating how classes facilitate inheritance and method overriding in JavaScript.

Explore the concept of inheritance in ES2015 classes on GreatFrontEnd
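Overriding does not have to replace the parent's behavior entirely: `super.method()` calls the parent implementation from inside the child. This variant of the `Animal`/`Dog` example also omits the `Dog` constructor, in which case JavaScript supplies a default one that forwards its arguments to `super`.

```javascript
class Animal {
  constructor(name) {
    this.name = name;
  }

  speak() {
    return `${this.name} makes a noise.`;
  }
}

// No constructor here: the default one calls super(...args)
class Dog extends Animal {
  speak() {
    // Reuse the parent implementation, then add to it
    return `${super.speak()} ${this.name} barks.`;
  }
}

const dog = new Dog('Rex');
console.log(dog.speak()); // Rex makes a noise. Rex barks.
console.log(dog instanceof Animal); // true
```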

37. What is Lexical Scoping?

Lexical scoping in JavaScript determines how variable names are resolved based on their location within the source code. Nested functions have access to variables from their parent scopes, enabling them to utilize and manipulate these variables.

Example

```js
function outerFunction() {
  let outerVariable = 'I am outside!';

  function innerFunction() {
    console.log(outerVariable); // 'I am outside!'
  }

  innerFunction();
}

outerFunction();
```

In this scenario, `innerFunction` can access `outerVariable` because of lexical scoping rules, which allow inner functions to access variables defined in their outer scope.

Explore the concept of lexical scoping on GreatFrontEnd
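Lexical scoping means a function resolves names based on where it is written, not where it is called. In this sketch (all names are illustrative), `showLabel` ignores the `label` defined inside `caller`, and `makeCounter` shows a closure keeping access to its lexical scope after the outer function has returned.

```javascript
const label = 'global';

function showLabel() {
  return label; // resolves to the `label` visible where showLabel was written
}

function caller() {
  const label = 'caller'; // a separate variable, invisible to showLabel
  return [label, showLabel()];
}

console.log(caller()); // [ 'caller', 'global' ]

// Closures keep access to their lexical scope after the outer function returns
function makeCounter() {
  let count = 0;
  return () => ++count;
}

const next = makeCounter();
next();
console.log(next()); // 2
```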

38. What is Scope in JavaScript?

Scope in JavaScript defines the accessibility of variables and functions in different parts of the code. There are three primary types of scope:

- Global Scope: Variables declared outside any function or block are accessible throughout the entire code.

- Function Scope: Variables declared within a function are accessible only within that function.

- Block Scope: Introduced in ES2015, variables declared with `let` or `const` within a block (e.g., within `{}`) are accessible only within that block.

Example

```js
// Global scope
var globalVar = 'I am global';

function myFunction() {
  // Function scope
  var functionVar = 'I am in a function';

  if (true) {
    // Block scope
    let blockVar = 'I am in a block';
    console.log(blockVar); // Accessible here
  }

  // console.log(blockVar); // Throws a ReferenceError
}

console.log(globalVar); // Accessible here
// console.log(functionVar); // Throws a ReferenceError
```

In this example, `globalVar` is accessible globally, `functionVar` is confined to `myFunction`, and `blockVar` is restricted to the `if` block.

Explore the concept of scope in JavaScript on GreatFrontEnd

39. What is the Spread Operator and How is it Used?

The spread operator (`...`) in JavaScript expands an iterable (like an array or string) into individual elements, and copies an object's own enumerable properties into another object. It's versatile and can be used for copying, merging, and passing array elements as function arguments.

Examples

```js
// Copying an array
const arr1 = [1, 2, 3];
const arr2 = [...arr1];

// Merging arrays
const arr3 = [4, 5, 6];
const mergedArray = [...arr1, ...arr3];

// Copying an object
const obj1 = { a: 1, b: 2 };
const obj2 = { ...obj1 };

// Merging objects
const obj3 = { c: 3, d: 4 };
const mergedObject = { ...obj1, ...obj3 };

// Passing array elements as function arguments
const sum = (x, y, z) => x + y + z;
const numbers = [1, 2, 3];
console.log(sum(...numbers)); // Output: 6
```

The spread operator simplifies operations such as copying arrays or objects, merging multiple arrays or objects into one, and spreading elements of an array as individual arguments to functions.

Explore the concept of the spread operator and its uses on GreatFrontEnd
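One caveat worth showing: spreading produces a shallow copy, so nested objects and arrays are shared between the original and the copy. The sketch below demonstrates this and, for contrast, uses `structuredClone()` (available in modern browsers and Node.js 17+) to make a deep copy. The object contents are illustrative.

```javascript
const original = { name: 'Ada', tags: ['math'] };
const copy = { ...original };

copy.name = 'Grace'; // top-level property: only the copy changes
copy.tags.push('code'); // nested array: shared by both objects

console.log(original.name); // Ada
console.log(original.tags); // [ 'math', 'code' ]

// structuredClone() copies nested structures too
const deepCopy = structuredClone(original);
deepCopy.tags.push('poetry');
console.log(original.tags.length); // 2
console.log(deepCopy.tags.length); // 3
```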

40. How Does `this` Binding Work in Event Handlers?

In JavaScript, the value of `this` depends on how a function is called. In an event handler registered with `addEventListener` as a regular function, `this` refers to the element the listener is attached to (the same as `event.currentTarget`), which may differ from the element that triggered the event (`event.target`). An arrow function used as a handler instead takes `this` from its surrounding scope. To ensure `this` references the intended context, techniques like using `bind()`, arrow functions, or explicitly setting the context are employed.

These methods help maintain the correct reference for `this` within event handling functions, ensuring consistent and predictable behavior across various event-driven scenarios in JavaScript applications.

Explore the concept of `this` binding in event handlers on GreatFrontEnd

41. What is a Block Formatting Context (BFC) and How Does It Function?

A Block Formatting Context (BFC) is a pivotal concept in CSS that influences how block-level elements are rendered and interact on a webpage. It is an isolated region in which block boxes are laid out. Floats, absolutely positioned elements, inline-blocks, table cells, table captions, and elements with an `overflow` value other than `visible` or `clip` (except when that value is propagated to the viewport) establish a new BFC.

Knowing how to establish a BFC is essential because, without one, a containing box may fail to enclose its floated children. This issue is akin to collapsing margins but is often more deceptive, causing entire boxes to collapse unexpectedly.

A BFC is formed when an HTML box satisfies at least one of the following criteria:

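- The `float` property is set to a value other than `none`.
- The `position` property is set to `absolute` or `fixed`.
- The `display` property is set to `table-cell`, `table-caption`, `inline-block`, `flow-root`, `flex`, `inline-flex`, `grid`, or `inline-grid`. (Strictly speaking, flex and grid containers establish a flex or grid formatting context rather than a BFC, but it isolates their contents in a similar way.)
- The `overflow` property is set to a value other than `visible` or `clip`.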

Within a BFC, each box's left outer edge aligns with the left edge of its containing block (or the right edge in right-to-left layouts). Additionally, vertical margins between adjacent block-level boxes within a BFC collapse into a single margin.
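The criteria above can be summarized as a simple predicate. This is a hypothetical helper (`establishesBFC` is our own name) operating on plain strings, for example values you might read with `getComputedStyle(el).getPropertyValue(...)`; it is not a browser API and ignores less common triggers such as the root element, `contain`, and multi-column containers.

```javascript
// Simplified model of the BFC triggers listed above.
// Inputs are plain computed-style strings; defaults are the CSS initial values.
function establishesBFC({
  float = 'none',
  position = 'static',
  display = 'block',
  overflow = 'visible',
} = {}) {
  // Floated elements establish a BFC.
  if (float !== 'none') return true;

  // Absolutely positioned elements (absolute or fixed) establish a BFC.
  if (position === 'absolute' || position === 'fixed') return true;

  // Display values that create a new (block, flex, or grid) formatting context.
  const isolatingDisplays = [
    'inline-block', 'table-cell', 'table-caption', 'flow-root',
    'flex', 'inline-flex', 'grid', 'inline-grid',
  ];
  if (isolatingDisplays.includes(display)) return true;

  // Any overflow other than visible or clip (e.g. hidden, auto, scroll).
  return overflow !== 'visible' && overflow !== 'clip';
}

console.log(establishesBFC({ overflow: 'hidden' })); // true
console.log(establishesBFC({ position: 'relative' })); // false
```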

Discover Block Formatting Context (BFC) and its Operation on GreatFrontEnd

42. What is `z-index` and How is a Stacking Context Created?

The `z-index` property in CSS controls the stacking order of overlapping elements along the z-axis (toward or away from the viewer). It only takes effect on positioned elements (those with a `position` value other than `static`) and on flex and grid items.

In the absence of a `z-index` value, elements stack in the order they appear in the Document Object Model (DOM), so elements appearing later in the HTML markup are rendered on top of earlier ones at the same hierarchy level. Positioned elements (those with non-static positioning) and their children are painted above statically positioned elements, regardless of their order in the HTML, unless they are given a negative `z-index`.

A stacking context is a group of elements that share a common stacking order. Within a local stacking context, the `z-index` values of child elements are relative to that context rather than to the entire document. Elements outside the context, such as siblings of the element that forms it, cannot interleave with the layers inside it. For instance, suppose element A forms a stacking context and its sibling, element B, is rendered on top of A. Then element C, a child of A, can never appear above B, even if C has a higher `z-index` than B.

Each stacking context is self-contained: after its children are stacked, the whole context is treated as a single unit in the parent stacking context's order. A new stacking context is created by, among other things, a positioned element with a `z-index` other than `auto`, an `opacity` less than 1, a `filter` other than `none`, or a `transform` other than `none`.

Learn about `z-index` and Stacking Contexts on GreatFrontEnd

43. How Does a Browser Match Elements to a CSS Selector?

This topic relates to writing efficient CSS, specifically how browsers interpret and apply CSS selectors. Browsers match selectors from right to left, starting with the rightmost part (the key selector) and moving outward. They first identify all elements that match the key selector and then traverse up the DOM tree to verify whether those elements satisfy the remaining parts of the selector.

For example, consider the selector `p span`. Browsers first locate all `<span>` elements and then check each span's ancestor chain to determine whether it is inside a `<p>` element. Once a `<p>` ancestor is found for a given `<span>`, the browser confirms that the `<span>` matches the selector and stops traversing for that element.

The efficiency of selector matching depends on the length of the selector chain: the shorter the chain, the fewer checks the browser needs to verify a match.
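The right-to-left strategy can be sketched in a few lines. This is a simplified model, not how any particular browser engine is implemented: it uses plain objects built by a hypothetical `makeNode` helper instead of DOM nodes, and it supports only tag names joined by descendant combinators (spaces).

```javascript
// Minimal element model: a tag name plus a reference to the parent node.
function makeNode(tag, parent = null) {
  return { tag, parent };
}

// Matches selectors like "div p span", evaluating the parts right to left.
function matchesDescendantSelector(node, selector) {
  const parts = selector.trim().split(/\s+/);

  // The key selector (rightmost part) must match the node itself.
  if (node.tag !== parts[parts.length - 1]) return false;

  // Each remaining part must match some ancestor, higher than the previous match.
  let current = node.parent;
  for (let i = parts.length - 2; i >= 0; i--) {
    while (current && current.tag !== parts[i]) {
      current = current.parent; // keep climbing the ancestor chain
    }
    if (!current) return false; // ran out of ancestors without a match
    current = current.parent; // the next part must match further up
  }
  return true;
}

// Tiny tree for illustration: body > p > span and body > div > span.
const body = makeNode('body');
const paragraph = makeNode('p', body);
const spanInP = makeNode('span', paragraph);
const div = makeNode('div', body);
const spanInDiv = makeNode('span', div);

console.log(matchesDescendantSelector(spanInP, 'p span')); // true
console.log(matchesDescendantSelector(spanInDiv, 'p span')); // false
```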

Understand How Browsers Match CSS Selectors on GreatFrontEnd

44. What is the Box Model in CSS and How Can You Control Its Rendering?

The CSS box model is a fundamental concept that describes the rectangular boxes generated for elements in the document tree, determining how they are laid out and displayed. Each box comprises a content area (such as text or images) surrounded by optional `padding`, `border`, and `margin` areas.

The box model is responsible for calculating:

- The total space a block element occupies.

- Whether borders and margins overlap or collapse.

- The overall dimensions of a box.

Box Model Rules

- Dimensions Calculation: A block element's size is determined by its `width`, `height`, `padding`, and `border`.
- Automatic Height: If no `height` is specified, a block element's height adjusts to its content plus `padding` (unless floats are involved).
- Automatic Width: If no `width` is set, a non-floated block element expands to fill its parent's width minus its own horizontal `padding`, `border`, and `margin`, unless a `max-width` is specified.
  - Certain elements like `table`, `figure`, and `input` have inherent width values and may not expand fully.
  - Inline elements like `span` do not have a default width and will not expand to fit.
- Content Dimensions: By default, an element's `height` and `width` describe the size of its content area.
- Box-Sizing: By default (`box-sizing: content-box`), `padding` and `border` are not included in an element's `width` and `height`.

Note: Margins do not contribute to the actual size of the box; they affect the space outside the box. The box's area ends at the `border` and does not extend into the `margin`.

Additional Considerations

Understanding the `box-sizing` property is crucial because it changes how an element's `height` and `width` are calculated:

- `box-sizing: content-box`: The default behavior, where `width` and `height` set only the content size.
- `box-sizing: border-box`: `width` and `height` include `padding` and `border`, but not `margin`.
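To make the `box-sizing` arithmetic concrete, here is a small helper (`boxWidths` is a hypothetical name, not a CSS or DOM API) that computes horizontal sizes in pixels, assuming equal left and right padding, border, and margin:

```javascript
// Computes horizontal sizes (in px) for an element, given its CSS values.
function boxWidths({ width, padding, border, margin, boxSizing = 'content-box' }) {
  // content-box: `width` is the content width; padding and border are added on top.
  // border-box: `width` already includes padding and border.
  const borderBoxWidth =
    boxSizing === 'border-box' ? width : width + 2 * padding + 2 * border;
  const contentWidth = borderBoxWidth - 2 * padding - 2 * border;
  // Margin sits outside the border box: it adds layout space, not box size.
  const occupiedWidth = borderBoxWidth + 2 * margin;
  return { contentWidth, borderBoxWidth, occupiedWidth };
}

const contentBox = boxWidths({ width: 200, padding: 20, border: 5, margin: 10 });
const borderBox = boxWidths({ width: 200, padding: 20, border: 5, margin: 10, boxSizing: 'border-box' });

console.log(contentBox); // { contentWidth: 200, borderBoxWidth: 250, occupiedWidth: 270 }
console.log(borderBox); // { contentWidth: 150, borderBoxWidth: 200, occupiedWidth: 220 }
```

With `border-box`, the declared `width: 200px` is the visible box width, which is why many teams find it easier to reason about.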

Many CSS frameworks apply `box-sizing: border-box` globally for a more intuitive sizing approach.

Explore the Box Model and Its Control in CSS on GreatFrontEnd

45. How Do You Utilize the CSS `display` Property? Provide Examples.

The `display` property in CSS dictates how an element is rendered in the document flow. Common values include `none`, `block`, `inline`, `inline-block`, `flex`, `grid`, `table`, `table-row`, `table-cell`, and `list-item`.

- `none`: Hides the element; it takes up no space in the layout, and all of its children are hidden as well. The element remains in the DOM but is rendered as if it did not exist.
- `block`: The element starts on a new line and occupies the full available width.
- `inline`: The element does not start on a new line and only occupies as much width as necessary.
- `inline-block`: Combines characteristics of `inline` and `block`. The element flows with text but can have `width` and `height` set.
- `flex`: Makes the element a flex container, enabling flexbox layout for its children.
- `grid`: Makes the element a grid container, enabling grid-based layout of its children.
- `table`: Makes the element behave like a `<table>` element.
- `table-row`: Makes the element behave like a `<tr>` (table row) element.
- `table-cell`: Makes the element behave like a `<td>` (table cell) element.
- `list-item`: Makes the element behave like an `<li>` (list item) element, enabling list-specific styling such as `list-style-type` and `list-style-position`.

For a comprehensive list of `display` values, refer to the CSS `display` page on MDN.

Understand the CSS `display` Property with Examples on GreatFrontEnd

46. How Do `relative`, `fixed`, `absolute`, `sticky`, and `static` Positioning Differ?

In CSS, an element's positioning is determined by its `position` property, which can be set to `relative`, `fixed`, `absolute`, `sticky`, or `static`. Here's how each behaves:

- `static`: The default positioning. Elements flow naturally within the document. The `top`, `right`, `bottom`, `left`, and `z-index` properties have no effect.
- `relative`: The element is positioned relative to its normal position. Adjusting `top`, `right`, `bottom`, or `left` moves the element without affecting the layout of surrounding elements; the space it originally occupied is preserved, leaving a gap where it would have been.
- `absolute`: The element is removed from the normal document flow and positioned relative to its nearest positioned ancestor (an ancestor with a `position` other than `static`). If no such ancestor exists, it is positioned relative to the initial containing block. Absolutely positioned elements do not affect the position of other elements and can have `width` and `height` specified.
- `fixed`: Similar to `absolute`, but the element is positioned relative to the viewport, so it stays in the same place even when the page is scrolled.
- `sticky`: A hybrid of `relative` and `fixed`. The element behaves like `relative` until scrolling crosses a specified threshold (e.g., `top: 0`), after which it behaves like `fixed`, staying in place until its parent container scrolls out of view.

Understanding these positioning schemes is vital for controlling the layout and behavior of elements, especially in responsive and dynamic designs.

Learn About Positioning Schemes in CSS on GreatFrontEnd

47. What Should You Consider When Designing for Multilingual Websites?

Designing and developing for multilingual websites involves various considerations to ensure accessibility and usability across different languages and cultures. This process is part of internationalization (i18n).

Search Engine Optimization (SEO)

- Language Attribute: Use the `lang` attribute on the `<html>` tag to specify the page's language.
- Locale in URLs: Include locale identifiers in URLs (e.g., `en_US`, `zh_CN`).
- Alternate Links: Use `<link rel="alternate" hreflang="other_locale" href="url_for_other_locale">` to inform search engines about alternate language versions of the page.
- Fallback Pages: Provide a fallback page for unmatched languages using `<link rel="alternate" href="url_for_fallback" hreflang="x-default" />`.
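If a page's language versions are known at build or render time, the alternate links can be generated rather than written by hand. A minimal sketch, assuming a hypothetical `pageVersions` map and `buildAlternateLinks` helper (the `example.com` URLs are placeholders). Note that `hreflang` values use hyphenated language-region codes such as `en-US`, even if your URL paths follow a different pattern.

```javascript
// Hypothetical site config: locale -> URL of that language version of the page.
const pageVersions = {
  'en-US': 'https://example.com/en-us/pricing',
  'en-GB': 'https://example.com/en-gb/pricing',
  'zh-CN': 'https://example.com/zh-cn/pricing',
};
const fallbackUrl = 'https://example.com/pricing';

// Builds one <link rel="alternate"> tag per locale, plus the x-default fallback.
function buildAlternateLinks(versions, fallback) {
  const links = Object.entries(versions).map(
    ([locale, url]) => `<link rel="alternate" hreflang="${locale}" href="${url}" />`,
  );
  links.push(`<link rel="alternate" hreflang="x-default" href="${fallback}" />`);
  return links.join('\n');
}

console.log(buildAlternateLinks(pageVersions, fallbackUrl));
```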

Locale vs. Language

- Locale: Controls regional settings like number formats, dates, and times, which may vary within a language.

- Language Variations: Recognize that widely spoken languages have different dialects and regional variations (e.g., `en-US` vs. `en-GB`, `zh-CN` vs. `zh-TW`).

Locale Prediction and Flexibility

- Automatic Detection: Servers can detect a visitor's locale using the HTTP `Accept-Language` header and IP addresses.
- User Control: Allow users to easily change their preferred language and locale settings to account for inaccuracies in automatic detection.
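Automatic detection usually starts from the `Accept-Language` header. Below is a minimal sketch, assuming a hypothetical list of supported locales (`SUPPORTED_LOCALES`) and helper names of our own (`parseAcceptLanguage`, `pickLocale`); it is not a library API, and production code should still honor the user's explicit choice (for example, a saved preference) before consulting the header.

```javascript
// Hypothetical locales this site has translations for.
const SUPPORTED_LOCALES = ['en-US', 'en-GB', 'zh-CN', 'zh-TW'];
const DEFAULT_LOCALE = 'en-US';

// Parses a header such as "zh-TW,zh;q=0.9,en;q=0.8" into language tags
// ordered by their quality (q) weight, highest first.
function parseAcceptLanguage(header) {
  return header
    .split(',')
    .map((part) => {
      const [tag, ...params] = part.trim().split(';');
      const qParam = params.find((p) => p.trim().startsWith('q='));
      const q = qParam ? Number(qParam.trim().slice(2)) : 1; // q defaults to 1
      return { tag: tag.trim(), q: Number.isNaN(q) ? 0 : q };
    })
    .filter(({ tag, q }) => tag && q > 0)
    .sort((a, b) => b.q - a.q) // stable sort keeps header order for equal q
    .map(({ tag }) => tag);
}

// Picks the best supported locale: exact match first, then same base language.
function pickLocale(header, supported = SUPPORTED_LOCALES, fallback = DEFAULT_LOCALE) {
  for (const tag of parseAcceptLanguage(header || '')) {
    const exact = supported.find((l) => l.toLowerCase() === tag.toLowerCase());
    if (exact) return exact;
    const base = tag.split('-')[0].toLowerCase();
    const sameLanguage = supported.find((l) => l.split('-')[0].toLowerCase() === base);
    if (sameLanguage) return sameLanguage;
  }
  return fallback;
}

console.log(pickLocale('zh-TW,zh;q=0.9,en;q=0.8')); // zh-TW
console.log(pickLocale('en-AU,en;q=0.9')); // en-US (same base language)
console.log(pickLocale('fr-FR,fr;q=0.9')); // en-US (fallback)
```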

Text Length and Layout

- Variable Lengths: Be aware that translations can alter text length, potentially affecting layout and causing overflow issues.

- Design Flexibility: Avoid rigid designs that cannot accommodate varying text lengths, especially for headings, labels, and buttons.

Reading Direction

- Left-to-Right (LTR) vs. Right-to-Left (RTL): Accommodate different text directions, such as Hebrew and Arabic, by designing flexible layouts that can adapt to both LTR and RTL orientations.

Avoid Concatenating Translated Strings

- Dynamic Content: Instead of concatenating strings (e.g., `"The date today is " + date`), use template strings with parameter substitution to accommodate different grammar structures across languages.

Example:

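```javascript
const date = 'May 31, 2012'; // example value; format it for each locale in practice

// English
const messageEn = `I will travel on ${date}`;
// Chinese ("I will set off on <date>"): the date comes in the middle of the sentence
const messageZh = `我会在${date}出发`;
```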
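JavaScript template literals like the ones above are evaluated where they are written, so in practice each locale's sentence usually lives in a translation file with named placeholders that are filled in at runtime. A minimal sketch, assuming a hypothetical in-memory `messages` catalog and `translate` helper (real projects often use an i18n library for this):

```javascript
// Hypothetical message catalog: each locale stores the whole sentence, so
// translators can place {date} wherever their language's grammar requires.
const messages = {
  'en-US': { travel: 'I will travel on {date}' },
  'zh-CN': { travel: '我会在{date}出发' },
};

// Looks up a message (falling back to en-US) and replaces {name} placeholders.
// Placeholders without a matching parameter are left untouched.
function translate(locale, key, params = {}) {
  const template = messages[locale]?.[key] ?? messages['en-US'][key];
  return template.replace(/\{(\w+)\}/g, (match, name) =>
    Object.prototype.hasOwnProperty.call(params, name) ? String(params[name]) : match,
  );
}

console.log(translate('en-US', 'travel', { date: 'May 31, 2012' }));
// I will travel on May 31, 2012
console.log(translate('zh-CN', 'travel', { date: '2012年5月31日' }));
// 我会在2012年5月31日出发
```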

Formatting Dates and Currencies

- Regional Formats: Adapt date and currency formats to match regional conventions (e.g., "May 31, 2012" in the U.S. vs. "31 May 2012" in Europe).
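In JavaScript, the built-in `Intl` APIs can produce these regional formats instead of hand-written formatting. A minimal sketch using `Intl.DateTimeFormat` and `Intl.NumberFormat`; the locales, date, and amount are arbitrary examples, and the runtime needs locale data for them (modern browsers and Node.js builds include it by default):

```javascript
// May 31, 2012 at midnight UTC. The timeZone option is pinned to UTC so the
// output does not depend on the machine's local time zone.
const date = new Date(Date.UTC(2012, 4, 31));

const usDate = new Intl.DateTimeFormat('en-US', { dateStyle: 'long', timeZone: 'UTC' }).format(date);
const ukDate = new Intl.DateTimeFormat('en-GB', { dateStyle: 'long', timeZone: 'UTC' }).format(date);
console.log(usDate); // May 31, 2012
console.log(ukDate); // 31 May 2012

// Currency formatting follows the locale's separators and symbol placement.
const amount = 1234.5;
const usd = new Intl.NumberFormat('en-US', { style: 'currency', currency: 'USD' }).format(amount);
const eur = new Intl.NumberFormat('de-DE', { style: 'currency', currency: 'EUR' }).format(amount);
console.log(usd); // $1,234.50
console.log(eur); // 1.234,50 € (separated by a non-breaking space)
```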

Text in Images

- Scalability Issues: Avoid embedding text within images, as it complicates translation and accessibility. Use text elements styled with CSS instead to allow for easier localization.

Cultural Perceptions of Color

- Color Sensitivity: Be mindful that colors can carry different meanings and emotions across cultures. Choose color schemes that are culturally appropriate and inclusive.

Understand Multilingual Design Considerations on GreatFrontEnd

48. How Do `block`, `inline`, and `inline-block` Display Types Differ?

The